Last Updated: November 30, 2025

Large Language Model Concept Diagram

1. Key Takeaways

Large Language Models (LLMs) are AI systems trained on massive datasets to understand and generate human-like text.

Nearly all LLMs are built on transformers, which use self-attention to process sequences efficiently.

LLMs can summarize, write, translate, answer questions, generate code, and reason across long documents.

Their capabilities scale with data, parameters, and compute, which drove dramatic jumps from GPT-2 → GPT-3 → GPT-4 → GPT-4.1.

LLMs have become the core of enterprise AI tools, powering automation, analytics, customer service, and content generation.


2. What Is a Large Language Model (LLM)?

A Large Language Model (LLM) is an advanced AI system trained to read, write, understand, and generate human language. LLMs are built on transformer architectures, which allow them to analyze relationships between words, sentences, and concepts across massive amounts of text.

Modern LLMs can:

answer questions

write essays and emails

summarize long documents

translate languages

generate code

analyze legal or financial text

solve reasoning tasks

engage in multi-turn conversation

The term “large” refers to model size: LLMs generally contain billions to hundreds of billions of parameters, the numerical weights the model adjusts during training to learn patterns from data.
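To make “billions of parameters” concrete, here is a rough back-of-the-envelope estimate. It assumes a standard decoder-only transformer in which each layer holds about 12 × d_model² weights (4 × d_model² for the attention projections and 8 × d_model² for a feed-forward block that expands to 4 × d_model). It ignores biases and layer norms. The GPT-3-sized configuration (96 layers, d_model = 12,288, a vocabulary of about 50k tokens) is used purely as an illustration.

```python
def estimate_params(n_layers: int, d_model: int, vocab_size: int) -> int:
    """Rough parameter count for a decoder-only transformer.

    Per layer: 4 * d^2 for the Q, K, V and output projections,
    plus 8 * d^2 for a feed-forward block with hidden size 4 * d.
    Biases, layer norms and positional embeddings are ignored.
    """
    per_layer = 4 * d_model**2 + 8 * d_model**2
    # Token embedding matrix (assumed shared with the output layer).
    embeddings = vocab_size * d_model
    return n_layers * per_layer + embeddings


# Illustrative GPT-3-sized configuration.
total = estimate_params(n_layers=96, d_model=12_288, vocab_size=50_257)
print(f"{total / 1e9:.1f}B parameters")
```

This prints about 174.6B, close to the 175B figure usually quoted for GPT-3. Almost all of the weights sit in the repeated layers, which is why parameter count grows with depth and with the square of the model width.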

3. How Large Language Models Work

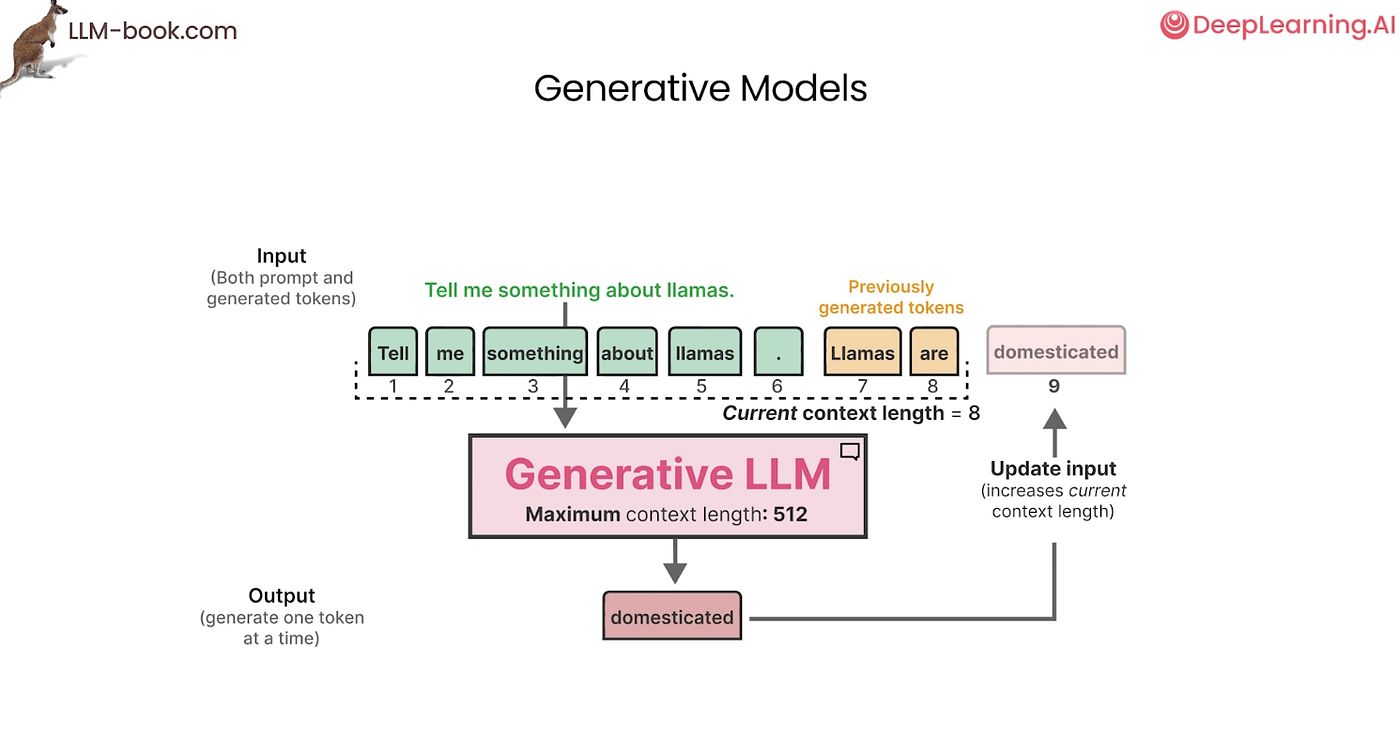

LLMs operate by converting text into tokens, processing those tokens through transformer layers, and generating output one token at a time.

Tokenization

Text is broken down into small chunks (tokens) such as words, subwords, or characters.
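A toy version of subword tokenization can be sketched with a greedy longest-match rule: at each position, take the longest vocabulary entry that fits. This is similar in spirit to how WordPiece segments words at inference time. Most production LLM tokenizers instead use learned schemes such as byte-pair encoding (BPE), and the tiny vocabulary here is hypothetical.

```python
def tokenize(text: str, vocab: dict[str, int]) -> list[int]:
    """Greedy longest-match subword tokenization (toy example).

    At each position, emit the id of the longest vocabulary entry that
    matches; fall back to the <unk> id if not even one character matches.
    """
    ids = []
    i = 0
    while i < len(text):
        for end in range(len(text), i, -1):  # try the longest candidate first
            piece = text[i:end]
            if piece in vocab:
                ids.append(vocab[piece])
                i = end
                break
        else:
            ids.append(vocab["<unk>"])  # no entry matched at this position
            i += 1
    return ids


# Hypothetical toy vocabulary; real tokenizers have tens of thousands of entries.
VOCAB = {"<unk>": 0, "token": 1, "iz": 2, "ation": 3, " ": 4, "is": 5, "fun": 6}

print(tokenize("tokenization is fun", VOCAB))
```

Here "tokenization" is split into the subwords "token", "iz" and "ation", giving the ids [1, 2, 3, 4, 5, 4, 6] for the whole phrase. Subwords let a fixed-size vocabulary cover words it has never stored whole.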

Embedding

Each token is converted into a vector—a numeric representation of meaning.
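In practice, each token id selects one row of a learned embedding matrix, and tokens with related meanings end up with vectors pointing in similar directions. The sketch below uses a hypothetical 3-dimensional matrix with hand-picked values, and it measures closeness with cosine similarity, a common choice for comparing embeddings.

```python
import math

# Hypothetical learned embedding matrix: row i is the vector for token id i.
# Real models learn vectors with hundreds or thousands of dimensions.
EMBEDDING_MATRIX = [
    [0.80, 0.65, 0.10],  # id 0: "king"
    [0.78, 0.70, 0.12],  # id 1: "queen"
    [0.05, 0.10, 0.90],  # id 2: "apple"
]


def embed(token_ids: list[int]) -> list[list[float]]:
    """Look up one vector per token id (a row of the embedding matrix)."""
    return [EMBEDDING_MATRIX[i] for i in token_ids]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Cosine of the angle between two vectors (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


king, queen, apple = embed([0, 1, 2])
print(f"king vs queen: {cosine_similarity(king, queen):.3f}")
print(f"king vs apple: {cosine_similarity(king, apple):.3f}")
```

With these made-up values, "king" and "queen" score close to 1 while "king" and "apple" score much lower, which is the kind of geometry a trained model learns from data.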

Transformer Layers

Self-attention lets each token weigh how relevant every other token in the context is to it. Feed-forward layers then further transform each token’s representation.
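The core self-attention computation is scaled dot-product attention, softmax(QKᵀ / √d)·V: each token’s query is compared with every token’s key, the scores become weights, and the output is a weighted average of the value vectors. This is a single-head sketch in plain Python with no masking and no learned projections; the input vectors are hypothetical.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    queries, keys, values: lists of vectors, one per token.
    Returns one output vector per query, plus the attention weights.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        # How strongly this token's query matches every token's key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values))
               for j in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights


# Three tokens with hypothetical 2-dimensional vectors. In a real layer,
# Q, K and V come from separate learned projections of the embeddings.
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, w = attention(x, x, x)
for row in w:
    print([round(v, 3) for v in row])
```

Each printed row shows how much one token attends to each of the three tokens. Real transformers run many such heads in parallel and stack dozens of layers.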

Prediction & Generation

The model predicts the next token based on all previous tokens, generating text step-by-step until completion.
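The step-by-step generation loop can be sketched as greedy decoding: repeatedly ask the model for a next-token distribution, append the most probable token, and stop at an end-of-sequence marker or a length limit. The probability table and `next_token_distribution` below are hypothetical stand-ins for a real model, which conditions on the entire preceding context rather than only the last token.

```python
# Hypothetical next-token probabilities, keyed by the previous token.
NEXT_TOKEN_PROBS = {
    "<bos>": {"the": 0.6, "a": 0.4},
    "the": {"cat": 0.5, "dog": 0.3, "<eos>": 0.2},
    "a": {"dog": 0.7, "<eos>": 0.3},
    "cat": {"sat": 0.8, "<eos>": 0.2},
    "dog": {"ran": 0.6, "<eos>": 0.4},
    "sat": {"<eos>": 1.0},
    "ran": {"<eos>": 1.0},
}


def next_token_distribution(context: list[str]) -> dict[str, float]:
    """Stand-in for a model forward pass (only looks at the last token)."""
    return NEXT_TOKEN_PROBS[context[-1]]


def generate_greedy(max_new_tokens: int = 10) -> list[str]:
    """Append the most probable next token until <eos> or the length limit."""
    context = ["<bos>"]
    for _ in range(max_new_tokens):
        probs = next_token_distribution(context)
        token = max(probs, key=probs.get)  # greedy: highest probability wins
        if token == "<eos>":
            break
        context.append(token)
    return context[1:]  # drop the <bos> marker


print(" ".join(generate_greedy()))
```

This produces "the cat sat". Production systems often sample from the distribution (for example with a temperature or top-p setting) instead of always taking the maximum, which makes output less repetitive.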

Reinforcement Learning & Fine-Tuning

Training techniques like RLHF (Reinforcement Learning from Human Feedback) refine the model’s outputs to be safer, more helpful, and more accurate.
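One concrete piece of RLHF is the reward model, which learns to score responses the way human raters would. A common formulation, based on the Bradley-Terry preference model, trains it on pairs where a human preferred one response over another and minimizes −log σ(r_chosen − r_rejected). The scores below are hypothetical, and the later reinforcement-learning step that uses the reward model (often PPO) is not shown.

```python
import math


def pairwise_preference_loss(reward_chosen: float, reward_rejected: float) -> float:
    """Bradley-Terry style loss for training a reward model.

    loss = -log(sigmoid(r_chosen - r_rejected)) = log(1 + exp(-margin))
    Small when the human-preferred response already scores higher,
    large when the reward model ranks the pair the wrong way round.
    """
    margin = reward_chosen - reward_rejected
    # log1p stays accurate when exp(-margin) is very small.
    return math.log1p(math.exp(-margin))


# Hypothetical reward-model scores for two responses to the same prompt.
print(pairwise_preference_loss(2.0, -1.0))  # agrees with the human ranking
print(pairwise_preference_loss(-1.0, 2.0))  # disagrees with it
```

Minimizing this loss over many ranked pairs pushes preferred responses to score higher, giving the later tuning step a learned signal for "what humans prefer".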

This pipeline allows LLMs to perform complex reasoning, maintain context across long conversations, and generate coherent, natural text.

4. Why LLMs Became So Powerful

Large Language Models exploded in capability due to three breakthroughs:

Transformers unlocked parallel processing.

Unlike RNNs and LSTMs, which process tokens one at a time, transformers process all tokens in a sequence in parallel during training, making training dramatically faster.

Scaling laws revealed predictable improvement.

Model performance improves predictably, roughly following power laws, as compute, training data, and model size increase together.

Massive datasets taught broad world knowledge.

LLMs train on:

books

articles

websites

code

academic papers

dialogue transcripts

This combination allowed models like GPT-4, Claude Opus, and Gemini Ultra to match or exceed human-level performance on many text-based benchmarks.
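The scaling-law observation above is usually written as a power law. One widely used form models loss as L(N, D) = E + A / N^α + B / D^β, where N is the parameter count, D is the number of training tokens, and E is an irreducible floor. The constants in this sketch are illustrative placeholders, not fitted values, so only the trend is meaningful.

```python
def power_law_loss(n_params: float, n_tokens: float,
                   irreducible: float = 1.7, a: float = 400.0, b: float = 400.0,
                   alpha: float = 0.34, beta: float = 0.28) -> float:
    """Loss modeled as an irreducible floor plus power-law terms in N and D.

    All constants are illustrative placeholders, not fitted values.
    """
    return irreducible + a / n_params**alpha + b / n_tokens**beta


# Growing model size and data together keeps lowering predicted loss,
# with diminishing returns as the irreducible term starts to dominate.
for n, d in [(1e9, 20e9), (10e9, 200e9), (100e9, 2e12)]:
    print(f"N={n:.0e}, D={d:.0e} -> loss ~ {power_law_loss(n, d):.3f}")
```

Because the curve is smooth, labs can fit it on small runs and extrapolate how much a larger model or dataset should help before paying for the full training run.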

5. Real-World Applications of LLMs

LLMs now power critical workflows across industries.

Customer Support

AI chatbots resolve tickets, classify queries, and handle multi-turn conversations.

Search Engines

Transformers analyze intent, refine relevance, and generate summaries.

Content Generation

Marketing teams use LLMs for emails, articles, product descriptions, and ads.

Software Development

AI coding assistants such as GitHub Copilot write code, explain functions, and detect bugs.

Healthcare

LLMs summarize clinical notes, draft reports, and assist with documentation.

Finance

LLMs support risk analysis, compliance, fraud detection, and extracting information from regulatory documents.

Legal

Contract review, case summarization, and clause extraction.

Analytics & Business Intelligence

Natural-language queries:

“What were Q3 margins by region?”

“What customer segments grew the fastest?”

LLMs have become a universal interface for tasks involving language, rules, or reasoning.

6. How Large Language Models Are Trained

LLMs learn mainly by predicting the next token in text (encoder-only models such as BERT instead learn to predict masked-out tokens). With enough data and compute, they learn grammar, facts, reasoning patterns, and representations of concepts.

📊 TABLE 1 — Training Stages of an LLM


| Training Stage | Description | Purpose |
|---|---|---|
| Pretraining | Model learns from trillions of tokens using next-word prediction. | Builds general linguistic + world knowledge. |
| Fine-Tuning | Developers train the model on domain-specific tasks. | Specializes the model (legal, medical, coding). |
| Instruction Tuning | Model learns to follow natural-language instructions. | Makes responses helpful, clear, safe. |
| RLHF | Humans rank outputs; model is tuned for quality. | Aligns model with human preferences. |
| Continual Training | Model adapts to new corporate or private data. | Keeps models updated + domain-relevant. |
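The pretraining objective in the first row of this table can be sketched in a few lines. Each position’s target is simply the token that follows it, and the loss is the average negative log-probability the model assigned to those true next tokens (cross-entropy). The sentence and the probability tables are hypothetical model outputs used only for illustration; a real model produces a distribution over its entire vocabulary.

```python
import math

tokens = ["the", "cat", "sat", "down"]

# Hypothetical model output: one next-token distribution per prefix.
predicted = [
    {"cat": 0.5, "dog": 0.3, "sat": 0.2},   # after "the"
    {"sat": 0.7, "ran": 0.2, "down": 0.1},  # after "the cat"
    {"down": 0.6, "up": 0.3, "cat": 0.1},   # after "the cat sat"
]


def next_token_loss(tokens: list[str], predicted: list[dict[str, float]]) -> float:
    """Average cross-entropy of the true next token at each position."""
    targets = tokens[1:]  # each position predicts the token after it
    if len(predicted) != len(targets):
        raise ValueError("need one predicted distribution per target token")
    nll = [-math.log(dist[target]) for dist, target in zip(predicted, targets)]
    return sum(nll) / len(nll)


print(f"loss = {next_token_loss(tokens, predicted):.3f}")
```

This prints a loss of about 0.520. Training nudges the weights so that the correct next tokens receive higher probability, which drives this number down across trillions of tokens.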

Training costs are high—state-of-the-art LLMs cost millions to tens of millions of dollars in GPU compute.
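A back-of-the-envelope estimate shows where figures like these come from. It uses the common approximation that training takes about 6 × N × D floating-point operations (N parameters, D training tokens). The hardware and price defaults (312 TFLOP/s peak per GPU, roughly an NVIDIA A100’s dense BF16 rating, 40% utilization, and $2 per GPU-hour) are assumptions for illustration, not vendor quotes, and the 70B-parameter, 1.4-trillion-token run is hypothetical.

```python
def training_cost_usd(n_params: float, n_tokens: float,
                      peak_flops_per_gpu: float = 312e12,
                      utilization: float = 0.4,
                      usd_per_gpu_hour: float = 2.0) -> tuple[float, float]:
    """Rough training cost using the ~6 * N * D FLOPs rule of thumb.

    Returns (gpu_hours, cost_usd). Hardware and price defaults are assumptions.
    """
    total_flops = 6 * n_params * n_tokens
    sustained_flops = peak_flops_per_gpu * utilization  # FLOP/s actually achieved
    gpu_hours = total_flops / sustained_flops / 3600
    return gpu_hours, gpu_hours * usd_per_gpu_hour


# Hypothetical run: 70B parameters trained on 1.4 trillion tokens.
hours, cost = training_cost_usd(70e9, 1.4e12)
print(f"~{hours:,.0f} GPU-hours, ~${cost / 1e6:.1f}M")
```

Under these assumptions the run needs roughly 1.3 million GPU-hours, or about $2.6M of compute. Larger models, more tokens, failed runs, and experimentation push real budgets well beyond a single clean run.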

7. Types of LLM Architectures

LLMs generally fall into one of three categories:

📊 TABLE 2 — LLM Architecture Comparison

| Architecture Type | Description | Best Use Cases | Examples |
|---|---|---|---|
| Decoder-Only | Generates text one token at a time. | Chatbots, writing, coding, reasoning. | GPT-4, Claude, Gemini, Llama. |
| Encoder-Only | Transforms text into embeddings. | Search, classification, semantic tasks. | BERT, RoBERTa. |
| Encoder-Decoder | An encoder reads the input; a decoder generates output conditioned on it. | Translation, summarization, structured output. | T5, FLAN-T5. |

Decoder-only transformers dominate modern generative AI, but encoder-only models remain essential for search and semantic tasks.
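In practice, the difference between these architectures shows up in the attention mask, which decides which positions each token may attend to (1 = allowed). Decoder-only models use a causal mask, so a token sees only itself and earlier tokens; encoder-only models use a full bidirectional mask. An encoder-decoder model combines both: bidirectional self-attention in the encoder, causal self-attention in the decoder, plus cross-attention from the decoder to the encoder’s output.

```python
def causal_mask(n: int) -> list[list[int]]:
    """Decoder-style mask: position i may attend to positions 0..i only."""
    return [[1 if j <= i else 0 for j in range(n)] for i in range(n)]


def bidirectional_mask(n: int) -> list[list[int]]:
    """Encoder-style mask: every position may attend to every position."""
    return [[1] * n for _ in range(n)]


for name, mask in [("causal", causal_mask(4)), ("bidirectional", bidirectional_mask(4))]:
    print(name)
    for row in mask:
        print("  ", row)
```

Inside an attention layer, blocked positions typically get a score of negative infinity before the softmax, so they receive zero weight. The causal mask is what lets a decoder-only model be trained on every position of a sequence at once while still learning to predict each next token.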

8. Limitations of Large Language Models

Despite their power, LLMs have several limits:

Hallucination

Models sometimes generate incorrect but convincing information.

Context Window Limits

Even with 100k–1M token windows, models cannot read unlimited text.
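A common workaround is to split long text into overlapping chunks that each fit the window and process them one at a time. This sketch shows the splitting step only; the window and overlap sizes are arbitrary illustration values, and real systems would choose them based on the model’s actual context limit.

```python
def chunk_tokens(tokens: list, window: int, overlap: int) -> list[list]:
    """Split a long token sequence into overlapping windows.

    Each chunk has at most `window` tokens; consecutive chunks share
    `overlap` tokens so context is not cut off abruptly at boundaries.
    """
    if not 0 <= overlap < window:
        raise ValueError("overlap must be in [0, window)")
    step = window - overlap
    chunks = []
    for start in range(0, len(tokens), step):
        chunks.append(tokens[start:start + window])
        if start + window >= len(tokens):
            break  # this chunk already reaches the end of the input
    return chunks


# Toy example: 10 "tokens", a 4-token window, 1 token of overlap.
print(chunk_tokens(list(range(10)), window=4, overlap=1))
```

The overlap trades a little extra compute for continuity: a sentence that straddles a boundary still appears intact in at least one chunk when the overlap is large enough.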

High Compute Costs

Training frontier models requires massive GPU clusters.

Latency

Large models may respond slowly without optimizations.

Lack of True Understanding

LLMs learn statistical patterns rather than reasoning the way humans do.

Domain Specificity

They may struggle in highly specialized domains without fine-tuning.

9. The Future of LLMs

The next evolution of LLMs will focus on:

Mixture of Experts (MoE)

Activating only a subset of expert sub-networks for each token to reduce compute cost.
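The idea can be sketched with top-k routing, a common MoE design in which a router scores every expert for a token but only the k highest-scoring experts actually run. Their outputs are then mixed using gate weights renormalized over the chosen experts. Scalar inputs, fixed gate logits, and the lambda “experts” are simplifications; in a real model each expert is a feed-forward network and a small learned router computes the logits from the token’s representation.

```python
import math
from collections.abc import Callable


def softmax(xs: list[float]) -> list[float]:
    """Normalize scores into positive weights that sum to 1."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]


def moe_layer(x: float, gate_logits: list[float],
              experts: list[Callable[[float], float]], k: int = 2) -> float:
    """Top-k mixture-of-experts for a single token.

    Only the k experts with the highest gate scores are evaluated.
    """
    top = sorted(range(len(experts)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in top])  # renormalize over the top-k
    return sum(w * experts[i](x) for w, i in zip(weights, top))


# Four hypothetical experts and router scores for one token.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x * x, lambda x: -x]
gate_logits = [0.1, 2.0, 1.5, -1.0]

print(moe_layer(3.0, gate_logits, experts, k=2))
```

Only experts 1 and 2 are evaluated here; the other two cost nothing for this token. That is how MoE models can hold a very large total parameter count while spending compute on only a fraction of it per token.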

State-Space Models (SSMs)

Architectures like Mamba, whose cost grows linearly with sequence length, making extremely long context windows more practical.
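At their core, state-space models carry a fixed-size hidden state through the sequence: h_t = A·h_{t−1} + B·x_t and y_t = C·h_t, so each new token costs the same constant amount of work. The sketch below is a plain linear, time-invariant SSM with a diagonal A and hypothetical parameter values. Mamba adds an input-dependent (“selective”) parameterization and a hardware-efficient parallel scan, neither of which is shown.

```python
def ssm_scan(xs: list[float], a: list[float], b: list[float], c: list[float]) -> list[float]:
    """Run a diagonal linear state-space model over a 1-D input sequence.

    h_t = a * h_{t-1} + b * x_t   (elementwise; state size = len(a))
    y_t = sum(c * h_t)
    """
    h = [0.0] * len(a)  # hidden state starts at zero
    ys = []
    for x in xs:  # one constant-cost update per token
        h = [ai * hi + bi * x for ai, hi, bi in zip(a, h, b)]
        ys.append(sum(ci * hi for ci, hi in zip(c, h)))
    return ys


# Hypothetical 2-dimensional state: one slowly decaying channel, one fast one.
print(ssm_scan([1.0, 0.0, 0.0, 0.0], a=[0.9, 0.5], b=[1.0, 1.0], c=[1.0, 1.0]))
```

Feeding a single impulse shows how the state “remembers” past input: the slow channel (0.9) fades gradually while the fast one (0.5) drops off quickly. Memory and compute stay constant per step no matter how long the sequence gets.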

AI Agents

LLMs that not only answer questions but also take actions across tools and systems.
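The control loop behind many agents can be sketched simply: the model either requests a tool call or gives a final answer, and the surrounding program runs the requested tool and feeds the result back. The JSON message format, the `get_weather` tool, and `fake_model` are hypothetical stand-ins, not any vendor’s tool-calling API.

```python
import json


def get_weather(city: str) -> str:
    """Hypothetical tool; a real agent might call a weather API here."""
    return f"Sunny in {city}"  # canned response for illustration


TOOLS = {"get_weather": get_weather}


def fake_model(messages: list[dict]) -> str:
    """Stand-in for an LLM call: requests a tool first, then answers."""
    if messages[-1]["role"] == "user":
        return json.dumps({"tool": "get_weather", "args": {"city": "Paris"}})
    return json.dumps({"answer": f"Based on the tool: {messages[-1]['content']}"})


def run_agent(question: str, max_steps: int = 5) -> str:
    """Ask the model, run any requested tool, feed the result back, repeat."""
    messages = [{"role": "user", "content": question}]
    for _ in range(max_steps):
        reply = json.loads(fake_model(messages))
        if "answer" in reply:
            return reply["answer"]
        messages.append({"role": "assistant", "content": json.dumps(reply)})
        result = TOOLS[reply["tool"]](**reply["args"])  # take the action
        messages.append({"role": "tool", "content": result})
    return "Stopped: step limit reached"


print(run_agent("What's the weather in Paris?"))
```

The step limit matters in practice: it keeps a confused model from looping on tool calls indefinitely.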

Domain-Specific Small Models

3B–10B parameter models fine-tuned for specific industries.
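Part of the appeal of small models is simple arithmetic: the memory needed just to hold the weights is parameters × bits per parameter ÷ 8 bytes. This sketch uses a 7B model from the 3B–10B range and common 16-, 8-, and 4-bit precisions as illustrations. It ignores activations and the attention cache, which add more memory at inference time.

```python
def weight_memory_gb(n_params: float, bits_per_param: int) -> float:
    """Memory needed just to hold the weights (no activations or KV cache)."""
    return n_params * bits_per_param / 8 / 1e9


for bits in (16, 8, 4):
    print(f"7B model at {bits}-bit: ~{weight_memory_gb(7e9, bits):.1f} GB")
```

This gives about 14 GB at 16-bit, 7 GB at 8-bit, and 3.5 GB at 4-bit, small enough for a single GPU. The same formula gives roughly 800 GB for a 400B-parameter model at 16-bit, which has to be spread across many GPUs.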

Multimodal Models

LLMs that understand text, images, audio, video, and structured data.

Enterprise Integration

Embedding LLMs into analytics, IT operations, customer support, and automation workflows.

10. Glossary

LLM: Large Language Model trained on massive datasets to understand and generate text.

Token: Small unit of text such as a word or subword.

Transformer: Neural architecture using self-attention.

Pretraining: Large-scale self-supervised training step, typically using next-token prediction.

Fine-Tuning: Task-specific or domain-specific training.

Context Window: Maximum number of tokens (input plus generated output) an LLM can process at once.

MoE: Mixture of Experts model architecture.

RLHF: Reinforcement Learning from Human Feedback.

11. Frequently Asked Questions

Are LLMs transformers?

Yes—almost all modern LLMs use transformer architectures.

How big are LLMs?

They typically range from about 3B parameters to 400B+ for frontier models.

Are LLMs trained on the whole internet?

No. They use curated subsets of public and licensed data.

Do LLMs think like humans?

No—they detect patterns, not human-like reasoning.

Can LLMs learn my company’s data?

Yes, through secure fine-tuning or private retrieval pipelines that embed your documents and supply the most relevant passages to the model at query time.
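Such an embedding-based retrieval pipeline can be sketched as: turn each document and the question into vectors, rank documents by cosine similarity, and paste the best match into the prompt. To keep the sketch self-contained, it uses simple word-count vectors as a stand-in for a real embedding model; the documents and prompt template are hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in embedding: bag-of-words counts (a real system uses an embedding model)."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(question: str, docs: list[str], k: int = 1) -> list[str]:
    """Return the k documents most similar to the question."""
    q = embed(question)
    return sorted(docs, key=lambda d: cosine(q, embed(d)), reverse=True)[:k]


docs = [
    "Refunds are processed within 14 days of a return request.",
    "Our office is closed on public holidays.",
]
question = "How long do refunds take"
context = retrieve(question, docs)
prompt = f"Answer using only this context:\n{context[0]}\n\nQuestion: {question}"
print(prompt)
```

Because the company data stays in the retrieval index rather than in the model’s weights, documents can be added or removed without retraining.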

Will LLMs replace jobs?

They will automate tasks, augment workers, and reshape workflows.

12. Want Daily AI News in Simple Language?

If you enjoy expert guides like this, subscribe to AI Business Weekly — the fastest-growing AI newsletter for business leaders.

👉 Subscribe to AI Business Weekly

https://aibusinessweekly.net