Last Updated: November 30, 2025

Embedding Vector Space Example

1. Key Takeaways

Embeddings convert words, sentences, images, or audio into numbers called vectors.

These vectors capture meaning, allowing AI systems to understand similarity.

Embeddings power RAG, search engines, ChatGPT memory, recommendations, and vector databases.

The closer two embeddings are in vector space, the more similar they are in meaning.

Modern AI depends heavily on embeddings to organize knowledge efficiently.


2. What Are Embeddings?

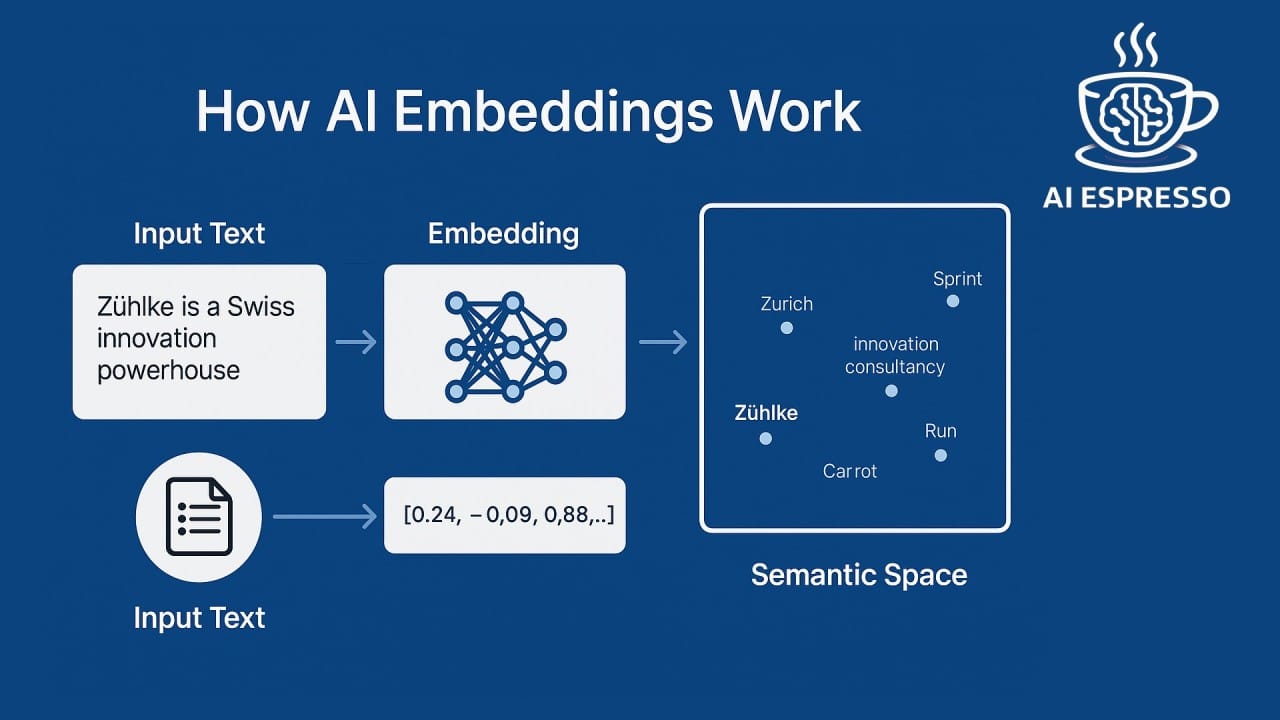

Embeddings are high-dimensional numerical representations of data. Instead of storing raw words or images, AI converts them into vectors — long lists of numbers — that represent the underlying meaning.

Example:

“car” and “vehicle” → vectors close together

“car” and “banana” → vectors far apart

This numerical representation allows machines to:

understand similarity

find relationships

categorize data

search based on meaning

retrieve relevant information

generalize concepts
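The car/vehicle/banana example above can be sketched with hand-made toy vectors. The three-dimensional values below are invented purely for illustration (real embeddings come from a trained model and have hundreds of dimensions); only the cosine similarity formula itself is standard.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (1.0 means same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy embeddings, chosen by hand; not the output of any real model.
toy_embeddings = {
    "car": [0.9, 0.8, 0.1],
    "vehicle": [0.8, 0.9, 0.2],
    "banana": [0.1, 0.2, 0.9],
}

car_vehicle = cosine_similarity(toy_embeddings["car"], toy_embeddings["vehicle"])
car_banana = cosine_similarity(toy_embeddings["car"], toy_embeddings["banana"])

print(f"car vs vehicle: {car_vehicle:.3f}")
print(f"car vs banana:  {car_banana:.3f}")
```

With these made-up values, "car" scores much higher against "vehicle" than against "banana", which is the behavior a trained embedding model is expected to show for real words.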

Embeddings form the semantic foundation of modern AI.

Whether you ask ChatGPT a question, search Google, receive a product recommendation, or upload a photo — embeddings are working behind the scenes.

3. Why Embeddings Matter

Embeddings solve a major problem:

Computers do not understand language — only numbers.

To operate on meaning, AI models need a mathematical representation of:

words

documents

images

audio

user behavior

products

actions

Embeddings enable AI systems to compare concepts with precision.

This is why embeddings are central to:

Semantic Search

Search engines match meaning, not keywords.

LLMs like ChatGPT

Embeddings help models represent intent and context, and they support memory and retrieval.

RAG (Retrieval-Augmented Generation)

Queries and documents are embedded and compared for similarity.

Recommendation Systems

Services such as Netflix, Amazon, and Spotify use embeddings for personalization.

Fraud Detection

Transactions become vectors for anomaly detection.

Computer Vision

Images and objects are embedded for recognition.

Across all of these domains, embeddings give AI systems a common way to compare items by meaning.

4. How Embeddings Work

Embeddings are produced by encoder models — often transformer networks — that compress information into dense vectors.

How the process works:

Input Data

Text, image, audio, or document enters the model.

Neural Encoding

A deep neural network analyzes the meaning, patterns, and context.

Vector Output

The final layer produces a vector of numbers (e.g., 768, 1024, or 3072 dimensions).

Normalization

Vectors are typically scaled to unit length so comparisons are fair.

Similarity Computation

Using cosine similarity or dot product, the AI determines how close two vectors are.
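The last two steps can be sketched in a few lines. This is a minimal illustration that assumes the normalization step means scaling each vector to unit length (L2 normalization, a common choice); the raw vectors are hypothetical stand-ins for encoder output.

```python
import math

def dot(a, b):
    """Dot product of two equal-length vectors."""
    return sum(x * y for x, y in zip(a, b))

def l2_normalize(vector):
    """Scale a vector so that its length (L2 norm) is 1."""
    norm = math.sqrt(dot(vector, vector))
    if norm == 0.0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vector]

# Hypothetical raw encoder outputs with different magnitudes.
raw_a = [3.0, 4.0, 0.0]
raw_b = [6.0, 8.0, 1.0]

unit_a = l2_normalize(raw_a)
unit_b = l2_normalize(raw_b)

# Cosine similarity computed directly from the raw vectors.
cosine_raw = dot(raw_a, raw_b) / (
    math.sqrt(dot(raw_a, raw_a)) * math.sqrt(dot(raw_b, raw_b))
)
# After normalization, a plain dot product gives the same number.
dot_unit = dot(unit_a, unit_b)

print(f"cosine of raw vectors:       {cosine_raw:.6f}")
print(f"dot product of unit vectors: {dot_unit:.6f}")
```

Once vectors are stored at unit length, the cheaper dot product can stand in for cosine similarity at query time.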

Example:

Sentence A:

“AI is transforming business.”

Sentence B:

“Artificial intelligence is changing companies.”

Even though the phrasing differs, their embeddings will be very close, because they convey the same meaning.

This is what lets AI understand and retrieve context accurately.

5. Types of Embeddings

Embeddings vary depending on what kind of data they represent.

Below is a table showing the most common types used today:

📊 TABLE 1 — Common Embedding Types

| Embedding Type | What It Represents | Real Use Cases |
|---|---|---|
| Text Embeddings | Words, sentences, documents | Search, RAG, chatbots |
| Image Embeddings | Photos, objects | Vision models, recognition |
| Audio Embeddings | Speech, sound | Voice assistants, audio search |
| Video Embeddings | Clips, frames | Surveillance, content analysis |
| User Embeddings | Behavior patterns | Personalization & ads |
| Product Embeddings | Items, features | Amazon & retail systems |
| Multimodal Embeddings | Text + images, etc. | CLIP, Gemini, unified models |

Each type lets AI operate on meaning instead of raw pixels, sounds, or words.

6. Components of Embeddings

Embeddings have several important characteristics:

Dimensionality

The number of values in the vector, usually 384 to 4096.

Higher dimensions can capture more detail, at the cost of more storage and computation.
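To make the dimensionality range concrete, here is a rough storage estimate. It assumes each value is stored as a 32-bit float (4 bytes) and counts only the raw vectors, not index structures or metadata; the function name is illustrative.

```python
def index_size_bytes(num_vectors, dimensions, bytes_per_value=4):
    """Raw storage for the vectors alone, assuming float32 values by default."""
    return num_vectors * dimensions * bytes_per_value

for dims in (384, 1024, 4096):
    size_gb = index_size_bytes(1_000_000, dims) / 1e9
    print(f"{dims:>5} dims x 1M vectors = {size_gb:.2f} GB")
```

Under these assumptions, one million 384-dimensional vectors need about 1.5 GB, and the figure grows linearly with both the dimension and the number of vectors.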

Distance Metrics

How similarity is measured:

cosine similarity (most common)

dot product

Euclidean distance
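The three metrics can disagree when vectors differ in length. The sketch below uses made-up vectors: `b` points in exactly the same direction as `a` but is twice as long, while `c` points in a slightly different direction.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def cosine_similarity(a, b):
    return dot(a, b) / (math.sqrt(dot(a, a)) * math.sqrt(dot(b, b)))

def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

# Hypothetical vectors chosen to expose the difference between metrics.
a = [1.0, 2.0, 3.0]
b = [2.0, 4.0, 6.0]   # same direction as a, twice the length
c = [1.0, 2.0, 2.5]   # slightly different direction, similar length

for name, other in (("b", b), ("c", c)):
    print(
        f"a vs {name}: cosine={cosine_similarity(a, other):.4f}  "
        f"dot={dot(a, other):.2f}  euclidean={euclidean_distance(a, other):.4f}"
    )
```

Cosine similarity ignores length and ranks `b` as the closer match, while Euclidean distance ranks `c` closer. Which behavior is preferable depends on whether vector magnitude carries meaning in a given model.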

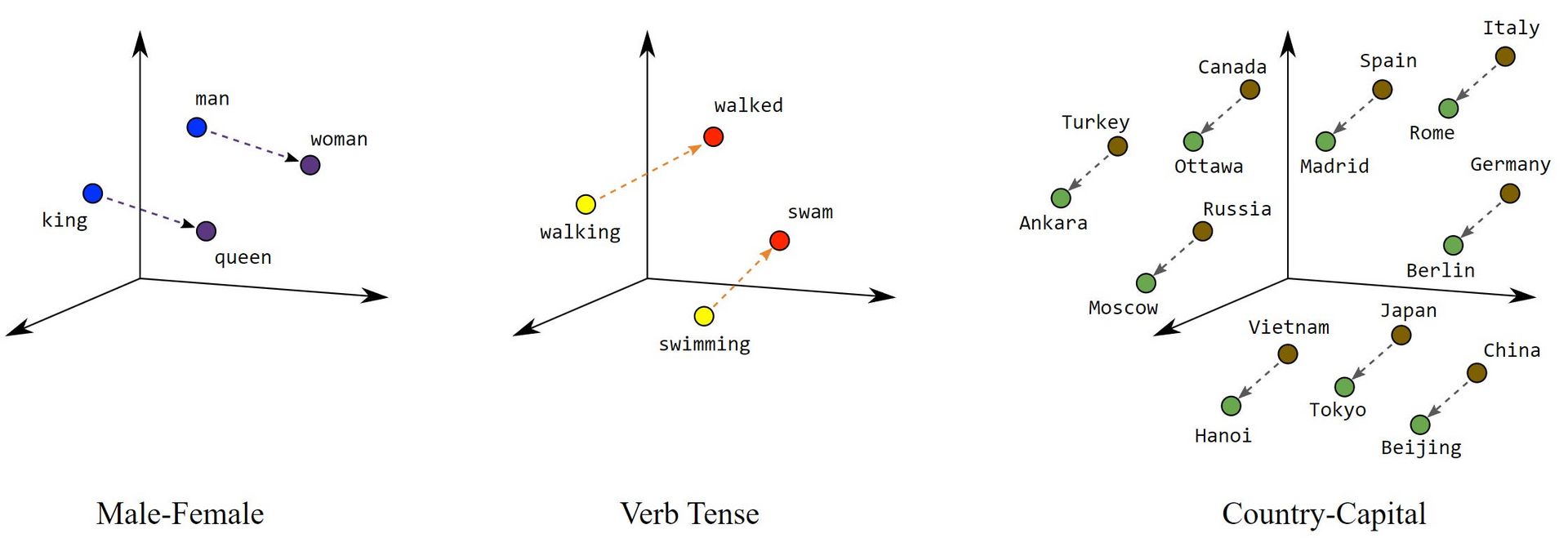

Vector Space

A geometric space where meaning becomes location.

similar meanings form clusters

dissimilar meanings scatter apart

relationships become measurable

Contextual Encoding

Modern embedding models, typically transformers, take surrounding context into account, so the same word can receive different vectors in different sentences. This makes them more accurate.

Normalization

Scales vectors to a common length (usually unit length) so that similarity scores are comparable.

These components determine how useful embeddings are for search and retrieval.

7. How Embeddings Are Trained

Embedding models are typically trained with self-supervised learning on massive datasets.

Common training methods:

Contrastive learning: model learns what is similar vs. different

Masked language modeling: predicting missing text

Triplet loss: pulls an anchor toward a similar (positive) item and pushes it away from a dissimilar (negative) one

Multimodal alignment: mapping images and text to the same space

Representation learning: discovering patterns without labels
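As one concrete case from the list above, triplet loss can be written in a few lines. This is a minimal sketch using Euclidean distance and a margin of 1.0; both are assumptions for illustration (some formulations use squared distance or cosine distance), and the two-dimensional points are made up.

```python
import math

def euclidean_distance(a, b):
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def triplet_loss(anchor, positive, negative, margin=1.0):
    """max(0, d(anchor, positive) - d(anchor, negative) + margin)."""
    gap = euclidean_distance(anchor, positive) - euclidean_distance(anchor, negative)
    return max(0.0, gap + margin)

# Hypothetical 2-D embeddings.
anchor = [0.0, 0.0]
positive = [0.1, 0.0]        # should end up close to the anchor
far_negative = [3.0, 0.0]    # already well separated from the anchor
near_negative = [0.5, 0.0]   # too close to the anchor

loss_easy = triplet_loss(anchor, positive, far_negative)
loss_hard = triplet_loss(anchor, positive, near_negative)

print(f"far negative:  loss = {loss_easy:.2f}")
print(f"near negative: loss = {loss_hard:.2f}")
```

The loss is zero once the negative is at least `margin` farther from the anchor than the positive, so training effort concentrates on triplets that are still too close together.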

Embedding Training Workflow

📊 TABLE 2 — Major Embedding Training Approaches

| Method | Description | Used By |
|---|---|---|
| Contrastive Learning | Compare pairs | OpenAI, Cohere |
| Self-Supervised Learning | Predict missing info | Transformers |
| Triplet Loss | Anchor/positive/negative | Vision models |
| Multimodal Alignment | Match images & text | CLIP, Gemini |
| Domain-Specific Tuning | Industry-specific embeddings | Finance, legal, medical |
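The "compare pairs" idea behind contrastive learning can be sketched with an InfoNCE-style loss, one common formulation. This is an illustrative assumption, not a description of how any provider named in the table trains its models. Each query's matching item sits at the same position in `positives`, and the other items in the batch act as negatives.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def info_nce_loss(queries, positives, temperature=0.1):
    """Mean cross-entropy where queries[i] should match positives[i]."""
    total = 0.0
    for i, query in enumerate(queries):
        logits = [dot(query, p) / temperature for p in positives]
        # Log-sum-exp with the maximum subtracted for numerical stability.
        max_logit = max(logits)
        log_sum_exp = max_logit + math.log(
            sum(math.exp(value - max_logit) for value in logits)
        )
        total += log_sum_exp - logits[i]
    return total / len(queries)

# Hypothetical unit-length embeddings for a batch of two pairs.
queries = [[1.0, 0.0], [0.0, 1.0]]
aligned = [[1.0, 0.0], [0.0, 1.0]]   # each query matches its own positive
swapped = [[0.0, 1.0], [1.0, 0.0]]   # each query matches the wrong item

loss_aligned = info_nce_loss(queries, aligned)
loss_swapped = info_nce_loss(queries, swapped)

print(f"aligned pairs: {loss_aligned:.4f}")
print(f"swapped pairs: {loss_swapped:.4f}")
```

The loss is near zero when each query is most similar to its own positive and large when it is more similar to another item in the batch, which is the signal that pulls matching pairs together during training.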

8. Real-World Applications

Embeddings are used everywhere:

Search Engines

Google and Bing rely on embeddings for semantic search.

ChatGPT / LLMs

Prompt understanding, retrieval, memory, ranking.

RAG Systems

Compare the query vector against document vectors to retrieve relevant passages.

Customer Support AI

Match complaints to past resolutions.

E-commerce

Product similarity, related items, personalization.

Video & Image Recognition

Face ID, object detection, scene classification.

Fraud Detection

Embedding-based anomaly detection.

Healthcare

Medical image comparison, diagnosis retrieval.

Embeddings help make AI systems more accurate, scalable, and context-aware.
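The retrieval step shared by search, RAG, and support matching can be sketched as a top-k lookup. The document texts and their three-dimensional vectors below are hypothetical; in a real system both the documents and the query would be embedded by the same model.

```python
import math

def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def top_k(query_vector, documents, k=2):
    """Return the k (score, text) pairs most similar to the query, best first."""
    scored = [(cosine_similarity(query_vector, vec), text) for text, vec in documents]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:k]

# Hypothetical pre-computed document embeddings.
documents = [
    ("Refund policy for damaged items", [0.9, 0.1, 0.0]),
    ("How to reset your password", [0.1, 0.9, 0.1]),
    ("Shipping times for international orders", [0.7, 0.0, 0.6]),
]
# Stand-in for the embedding of a question about returning a broken product.
query_vector = [0.9, 0.05, 0.1]

results = top_k(query_vector, documents, k=2)
for score, text in results:
    print(f"{score:.3f}  {text}")
```

In a RAG setup, the retrieved texts would then be passed to the language model as context for generating the answer.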

9. Limitations and Challenges

Despite their power, embeddings are not perfect.

High Dimensionality Costs

Large vectors require expensive storage and computation.

Domain Transfer Issues

General embeddings may underperform in specialized fields.

Bias and Representation Risk

If the training data is biased, embeddings may carry those biases.

Context Drift

Meanings shift over time — embeddings must be updated.

Vector Database Scalability

Billions of vectors require optimized indexing (HNSW, IVF, PQ).

Versioning & Consistency

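Vectors from different embedding models (or model versions) are not comparable, so mixing them in one index degrades search quality. Switching models means re-embedding the stored documents.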

Still, embeddings remain one of the most effective methods for semantic understanding in AI.
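To give a feel for the indexing techniques mentioned under Vector Database Scalability, here is a toy IVF-style (inverted file) index in plain Python. It is a sketch of the general idea only: the function names, the short k-means loop, and the random data are assumptions for illustration, and production vector databases implement this very differently.

```python
import random

def sq_dist(a, b):
    """Squared Euclidean distance (sufficient for ranking)."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def assign(vectors, centroids):
    """Group vector ids into buckets by nearest centroid."""
    buckets = [[] for _ in centroids]
    for idx, vector in enumerate(vectors):
        nearest = min(range(len(centroids)), key=lambda c: sq_dist(vector, centroids[c]))
        buckets[nearest].append(idx)
    return buckets

def build_ivf(vectors, n_lists, iterations=5, seed=0):
    """Cluster the vectors with a few k-means steps; return (centroids, buckets)."""
    rng = random.Random(seed)
    centroids = [list(v) for v in rng.sample(vectors, n_lists)]
    for _ in range(iterations):
        for c, ids in enumerate(assign(vectors, centroids)):
            if ids:  # keep the old centroid if a cluster ends up empty
                centroids[c] = [sum(col) / len(ids) for col in zip(*(vectors[i] for i in ids))]
    return centroids, assign(vectors, centroids)

def search(query, vectors, centroids, buckets, k=3, n_probe=1):
    """Scan only the n_probe buckets whose centroids are closest to the query."""
    probe = sorted(range(len(centroids)), key=lambda c: sq_dist(query, centroids[c]))[:n_probe]
    candidates = [i for c in probe for i in buckets[c]]
    return sorted(candidates, key=lambda i: (sq_dist(query, vectors[i]), i))[:k]

# Random stand-in data: 200 vectors with 8 dimensions each.
rng = random.Random(42)
vectors = [[rng.gauss(0, 1) for _ in range(8)] for _ in range(200)]
centroids, buckets = build_ivf(vectors, n_lists=4)
query = [rng.gauss(0, 1) for _ in range(8)]

approx = search(query, vectors, centroids, buckets, k=3, n_probe=1)  # one bucket
exact = search(query, vectors, centroids, buckets, k=3, n_probe=4)   # all buckets
print("approximate:", approx)
print("exact:      ", exact)
```

Probing fewer buckets means fewer distance computations at the risk of missing a true neighbor that landed in a bucket that was not scanned. That trade-off between speed and recall is what parameters like `n_probe` control.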

10. The Future of Embeddings

Several major trends are shaping where embeddings are going next:

Unified Multimodal Embedding Spaces

Models like Gemini embed text, images, audio, and video together.

Smaller, Faster Embeddings

Lightweight models for mobile devices.

LLM Memory Systems

Embeddings become the backbone of personalized long-term memory.

Domain-Specific Embedding Models

Legal, finance, scientific, medical — hyper-accurate specialized spaces.

Temporal Embeddings

Understanding how meaning evolves over time.

World Models

Robotics and self-driving cars rely on 3D embeddings from video streams.

The future of AI is deeply tied to the evolution of embeddings.

Glossary

Embedding — numeric vector representation that captures meaning.

Vector Space — geometric space plotting similarity and distance.

Cosine Similarity — the cosine of the angle between two vectors; the most common similarity measure for embeddings.

Dimensionality — number of features in an embedding.

Encoder — neural network that generates embedding vectors.

RAG — Retrieval-Augmented Generation; a language model generates answers using documents retrieved via embeddings.

FAQ

Are embeddings the same as vectors?

Embeddings are vectors, but specifically vectors that encode meaning.

Do embeddings only work for text?

No — images, audio, and user behavior can be embedded too.

How large are embeddings?

Usually 384–4096 dimensions.

Why are embeddings important for RAG?

They enable semantic retrieval by comparing vector similarity.
