Last Updated: November 30, 2025

Deep Learning Neural Network Diagram

1. Key Takeaways

Deep learning is a subset of machine learning based on neural networks with many layers (“deep” networks).

It powers nearly all modern AI: LLMs, computer vision, speech recognition, robotics, and self-driving cars.

Deep learning learns features automatically from raw data, greatly reducing the need for manual feature engineering.

Training it effectively typically requires large datasets and GPU (or TPU) compute.

Deep learning is the backbone of GPT models, Claude, Gemini, Copilot, and nearly every modern AI system.


2. What Is Deep Learning?

Deep learning is a branch of machine learning that uses neural networks with multiple layers to analyze data and learn patterns. Unlike traditional machine learning, deep learning can learn directly from raw input — such as text, images, or audio — without extensive human-designed features.

Deep learning powers:

large language models (LLMs)

computer vision

speech recognition

robotics

recommendation systems

fraud detection

autonomous driving

Modern AI is essentially built on deep learning.

3. How Deep Learning Works

Deep learning is built on neural networks, which transform data step-by-step through layers of interconnected “neurons.”

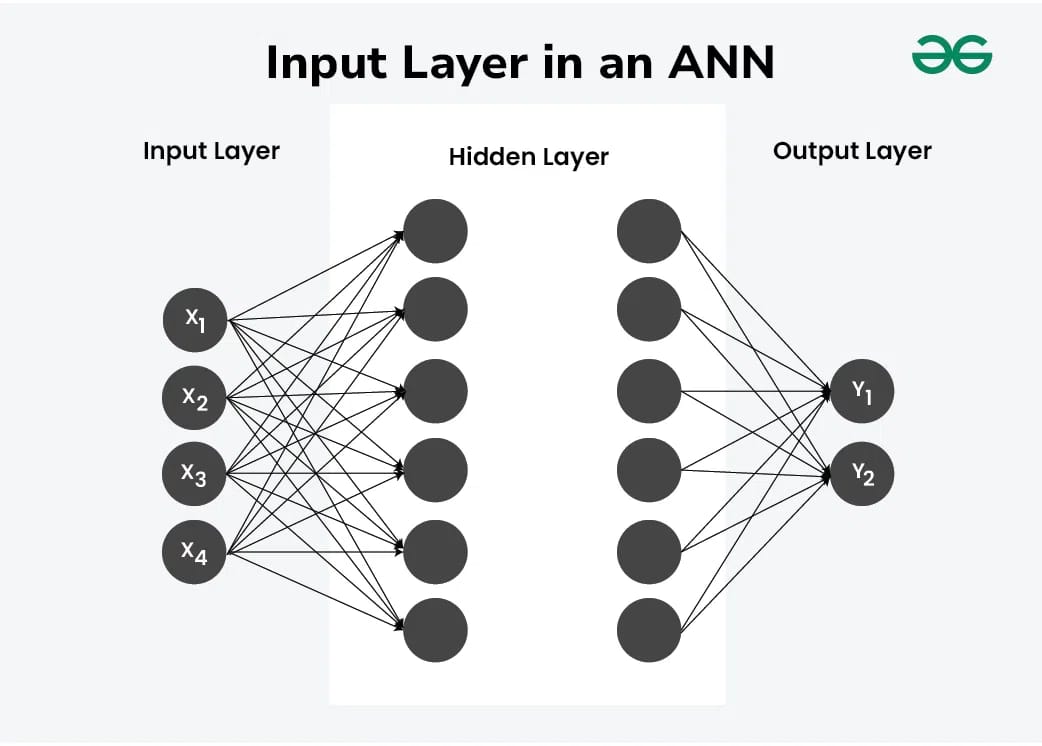

Input Layer

Receives raw data (text, pixels, audio waves, numbers).

Hidden Layers

Extract increasingly complex features.

Output Layer

Produces a prediction (classification, text, detection, etc.).

Each connection has a weight, and these weights adjust during training to minimize error.
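To make the input → hidden → output flow concrete, here is a minimal sketch of one forward pass through a tiny fully connected network in plain Python. The layer sizes, the weight values, and the `dense` helper are hypothetical choices for illustration. Real frameworks perform the same arithmetic with optimized matrix operations, and the weights come from training rather than being typed in by hand.

```python
# Tiny network: 2 inputs -> 3 hidden neurons (ReLU) -> 1 output.
# All weights below are hypothetical; a trained model would learn them.

def relu(z):
    return max(0.0, z)

def dense(inputs, weights, biases, activation=None):
    """Fully connected layer: each neuron takes a weighted sum of all
    inputs, adds its bias, and optionally applies an activation."""
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z) if activation else z)
    return outputs

W_hidden = [[0.5, -0.2], [0.3, 0.8], [-0.6, 0.1]]  # one row per hidden neuron
b_hidden = [0.0, 0.1, 0.2]
W_out = [[1.0, -0.5, 0.25]]                        # a single output neuron
b_out = [0.05]

x = [1.0, 2.0]                                     # input layer: raw features
hidden = dense(x, W_hidden, b_hidden, relu)        # hidden layer
y = dense(hidden, W_out, b_out)                    # output layer (raw score)
print("hidden:", hidden, "output:", y)
```

Each hidden neuron is just a weighted sum plus a bias passed through ReLU. The third neuron's sum is negative, so ReLU outputs 0 for it and it contributes nothing to the output.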

Why it’s powerful:

Learns features automatically

Handles extremely complex data

Generally improves as more training data becomes available

Tends to perform better with more compute and larger models

This structure is what enables LLMs, image models, and advanced AI systems.

4. Deep Learning vs. Traditional Machine Learning

Deep learning differs from traditional machine learning in several important ways.

Traditional ML typically involves:

manual features

smaller datasets

simpler architectures

Deep learning:

extracts features automatically

scales with massive datasets

supports highly complex tasks

TABLE 1 — Deep Learning vs Machine Learning

| Feature | Traditional ML | Deep Learning |
|---|---|---|
| Feature Engineering | Mostly manual | Largely automatic |
| Data Requirements | Low–Medium | High |
| Training Time | Faster | Slower (compute-heavy) |
| Interpretability | Easier | Harder |
| Best For | Structured (tabular) data, smaller problems | Images, text, audio, LLMs |
| Scalability | Limited | Very scalable |

5. Neural Networks Explained

At the core of deep learning is the neural network, a layered architecture loosely inspired by the human brain.

A neural network includes:

Neurons

Small computational units that compute a weighted sum of their inputs, add a bias, and apply an activation function.

Weights

Learnable parameters that scale each connection between neurons and are adjusted during training.

Layers

Input → multiple hidden layers → output.

Activation Functions

Non-linear functions that help the model learn complex patterns (ReLU, Sigmoid, Tanh).
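The three activation functions named above are short formulas. The sketch below writes them out in plain Python; the function names are our own choices for illustration, not a specific library's API.

```python
import math

def relu(z: float) -> float:
    # Keeps positive values, replaces negatives with 0.
    return max(0.0, z)

def sigmoid(z: float) -> float:
    # Maps any real number into (0, 1). Split into two branches so that
    # math.exp never receives a large positive argument (avoids overflow).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)

def tanh(z: float) -> float:
    # Maps any real number into (-1, 1), centered at 0.
    return math.tanh(z)

for z in (-2.0, 0.0, 2.0):
    print(f"z={z:+.1f}  relu={relu(z):.3f}  sigmoid={sigmoid(z):.3f}  tanh={tanh(z):.3f}")
```

Without a non-linear activation between layers, any stack of layers would collapse into a single linear transformation, no matter how many layers it has.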

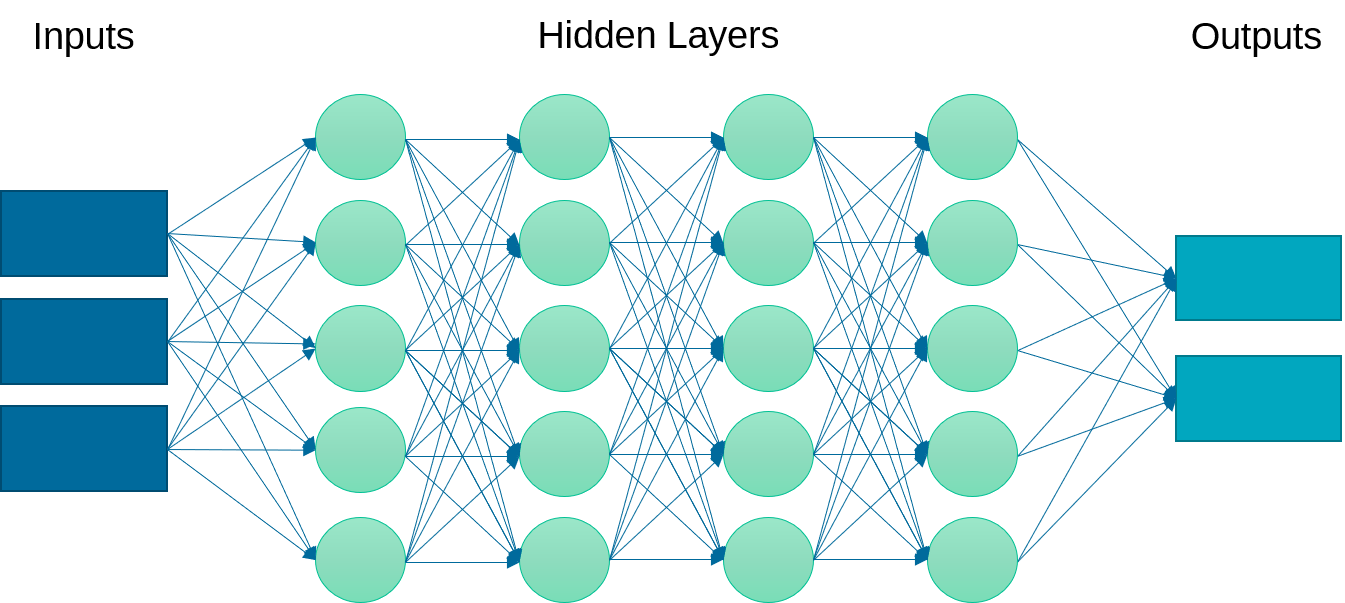

As more layers are added, the model becomes “deep” — capable of learning complex relationships found in images, text, and audio.

Neural networks can learn:

edges → shapes → objects (vision)

letters → words → meaning (language)

patterns → anomalies (finance)

signals → trajectories (autonomous systems)

This hierarchical feature learning is what makes deep learning so effective.

6. Types of Deep Learning Models

Deep learning includes several major architectures, each tailored to specific data types.

Feedforward Neural Networks (FNNs)

Basic layered networks used for simple prediction tasks.

Convolutional Neural Networks (CNNs)

Specialized for image and video analysis.

Recurrent Neural Networks (RNNs)

Used for sequential data such as text and time series; common in NLP before transformers.

Transformers

Now the dominant architecture for NLP and multimodal AI.

GPT, Claude, Gemini, and Llama are all transformer-based.

Autoencoders

Used for compression and anomaly detection.

Diffusion Models

Used for modern AI image and video generation.

Each architecture plays a role in today’s AI ecosystem, but transformers now dominate language and multimodal tasks.
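As an illustration of what makes CNNs suited to images, the sketch below implements the core operation of a convolutional layer: sliding a small kernel over an image and taking a weighted sum at each position. The tiny image and the hand-picked vertical-edge kernel are assumptions for the example. A CNN learns its kernel values during training, and libraries implement this operation far more efficiently.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (strictly, cross-correlation, which is what
    most deep learning libraries compute): slide the kernel over the image
    and take a weighted sum at every position where it fully fits."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            total = 0.0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# Hypothetical 4x6 grayscale image: dark (0) on the left, bright (1) on the right.
image = [
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
    [0, 0, 0, 1, 1, 1],
]
# Hand-picked vertical-edge detector; a CNN would learn kernels like this.
kernel = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]
result = conv2d(image, kernel)
print(result)
```

The output is large only where brightness changes from dark to bright, which is the kind of low-level “edge” feature that early CNN layers tend to detect before later layers combine edges into shapes and objects.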

Neural Network Layer Diagram

7. How Deep Learning Models Are Trained

Training a deep learning model is an iterative process: the model makes predictions, measures its error, and adjusts its weights, over and over.

Forward Pass

The model makes predictions.

Loss Calculation

Measures how wrong the prediction was.

Backpropagation

Computes how much each weight contributed to the error (the gradient of the loss with respect to each weight).

Optimizer Step

An optimizer such as SGD or Adam uses the gradients from backpropagation to update the weights.

Epochs

The training loop repeats over the entire dataset many times; each full pass is one epoch.
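The five steps above can be sketched end to end with the smallest possible model: a single linear neuron `w * x + b` fit to made-up data generated from y = 2x + 1, using mean squared error as the loss. This is an illustrative assumption, not how production models are built. With one neuron, backpropagation reduces to a direct application of the chain rule, and the optimizer here is plain full-batch gradient descent (SGD would apply the same update on random mini-batches instead).

```python
# Hypothetical toy data sampled from y = 2x + 1.
data = [(x, 2.0 * x + 1.0) for x in (-2.0, -1.0, 0.0, 1.0, 2.0)]

w, b = 0.0, 0.0          # parameters start untrained
learning_rate = 0.05
epochs = 200
history = []

for epoch in range(epochs):                  # each iteration = one epoch
    n = len(data)
    # 1. Forward pass: predict every example.
    preds = [w * x + b for x, _ in data]
    # 2. Loss: mean squared error between predictions and targets.
    loss = sum((p - y) ** 2 for p, (_, y) in zip(preds, data)) / n
    history.append(loss)
    # 3. Gradients of the loss w.r.t. w and b via the chain rule
    #    (for a single neuron, this is all backpropagation has to do).
    grad_w = sum(2 * (p - y) * x for p, (x, y) in zip(preds, data)) / n
    grad_b = sum(2 * (p - y) for p, (_, y) in zip(preds, data)) / n
    # 4. Optimizer step: plain gradient descent on the full dataset.
    w -= learning_rate * grad_w
    b -= learning_rate * grad_b

print(f"w={w:.4f}, b={b:.4f}, final loss={history[-1]:.2e}")
```

After 200 epochs, `w` and `b` end up very close to 2 and 1, and the recorded loss shrinks toward zero.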

Training requires:

GPUs or TPUs

large datasets

careful hyperparameter tuning (learning rate, batch size, and so on)

regularization to avoid overfitting
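As one concrete example of regularization, an L2 penalty (often called weight decay) adds `l2 * w**2` to the loss, which nudges weights toward zero. The `fit` helper, the toy data from y = 2x + 1, and the penalty strength are assumptions chosen for illustration, not a recommended setup.

```python
def fit(data, l2=0.0, lr=0.05, epochs=500):
    """Gradient descent on y ~ w*x + b with an optional L2 penalty l2 * w**2."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(epochs):
        preds = [w * x + b for x, _ in data]
        grad_w = sum(2 * (p - y) * x for p, (x, y) in zip(preds, data)) / n
        grad_b = sum(2 * (p - y) for p, (_, y) in zip(preds, data)) / n
        grad_w += 2 * l2 * w   # derivative of the penalty term l2 * w**2
        w -= lr * grad_w
        b -= lr * grad_b       # bias left unpenalized, a common convention
    return w, b

# Hypothetical toy data sampled from y = 2x + 1.
data = [(x, 2.0 * x + 1.0) for x in (-2.0, -1.0, 0.0, 1.0, 2.0)]
print(fit(data))           # no regularization
print(fit(data, l2=0.5))   # weight pulled toward zero
```

With `l2=0.5`, the learned weight settles at about 1.6 instead of 2.0: the model gives up a little training accuracy in exchange for smaller weights, which tends to help generalization on real, noisy data.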

TABLE 2 — Deep Learning Training Pipeline

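| Training Step | What Happens | Purpose |
|---|---|---|
| Forward Pass | Model computes predictions from the input | Produces outputs to evaluate |
| Loss Function | Measures the error of those predictions | Quantifies how far off the model is |
| Backpropagation | Computes gradients of the loss for every weight | Shows how each weight should change |
| Optimizer Step | Updates parameters using the gradients | Reduces the loss |
| Epochs | Repeated full passes through the data | Gives the model enough updates to converge |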
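For the optimizer step, Adam is one of the most widely used choices. Below is a minimal sketch of the Adam update rule for a single parameter, minimizing the toy function f(w) = (w - 3)², whose gradient is 2(w - 3). The β₁ = 0.9, β₂ = 0.999 and ε = 1e-8 values are the commonly used defaults; the learning rate of 0.1 and the `adam_minimize` helper are illustrative assumptions.

```python
import math

def adam_minimize(grad_fn, w, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8, steps=500):
    """Minimize a 1-D function with Adam, given a function returning its gradient."""
    m, v = 0.0, 0.0                              # first and second moment estimates
    for t in range(1, steps + 1):
        g = grad_fn(w)
        m = beta1 * m + (1 - beta1) * g          # running mean of gradients
        v = beta2 * v + (1 - beta2) * g * g      # running mean of squared gradients
        m_hat = m / (1 - beta1 ** t)             # bias correction for the zero init
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w

# Toy objective f(w) = (w - 3)^2, so the gradient is 2 * (w - 3).
w_final = adam_minimize(lambda w: 2.0 * (w - 3.0), w=0.0)
print(f"w after Adam: {w_final:.4f}")
```

Compared with plain gradient descent, Adam scales each step by a running estimate of the gradient's magnitude, which is why the early steps here move by roughly the learning rate regardless of how large the raw gradient is.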

8. Real-World Applications of Deep Learning

Deep learning powers critical AI systems across industries.

Computer Vision

Self-driving cars

Medical imaging

Facial recognition

Object detection

Industrial inspection

Natural Language Processing (NLP)

LLMs (GPT, Claude, Gemini)

Chatbots

Document understanding

Translation

Speech Processing

Voice assistants

Transcription

Emotion analysis

Finance

Fraud detection

Credit scoring

Algorithmic trading

Retail & E-commerce

Recommendation systems

Inventory forecasting

Dynamic pricing

Healthcare

Diagnostics

Risk prediction

Drug discovery

Deep learning is the engine behind modern automation, analysis, and intelligence.

9. Challenges and Limitations of Deep Learning

Even with its power, deep learning faces several limitations.

Data Requirements

Large, high-quality datasets are essential.

Compute Costs

Training frontier models can cost millions of dollars or more.

Explainability

It is often hard to explain why a model made a specific prediction, so models behave like “black boxes.”

Bias

Models can reflect biases in training data.

Energy Consumption

Training and serving large models consumes significant amounts of electricity.

Overfitting

Models may memorize instead of generalizing.

These challenges drive ongoing research into efficient architectures and better training methods.
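Overfitting, listed above, is often handled in practice with early stopping: track the loss on held-out validation data after each epoch and stop once it stops improving. The sketch below assumes the per-epoch validation losses are already available as a list; the numbers in it are made up for illustration, and the `early_stopping` helper and `patience` value are hypothetical.

```python
def early_stopping(val_losses, patience=3):
    """Return (best_epoch, stopped_at): the epoch with the lowest validation
    loss, and the epoch at which training would halt after `patience`
    epochs without improvement."""
    best_epoch, best_loss = 0, float("inf")
    stopped_at = len(val_losses) - 1             # default: ran every epoch
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss = epoch, loss  # new best: remember this checkpoint
        elif epoch - best_epoch >= patience:
            stopped_at = epoch                   # no improvement for too long
            break
    return best_epoch, stopped_at

# Hypothetical validation losses, one per epoch (made up for illustration).
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
best, stopped = early_stopping(val_losses)
print(f"best epoch: {best}, stopped at epoch: {stopped}")
```

Here validation loss bottoms out at epoch 3 and then rises, a typical sign that the model has started memorizing the training data, so training halts at epoch 6 and the epoch-3 weights would be kept.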

10. The Future of Deep Learning

Deep learning is rapidly evolving. Key trends include:

Efficient Architectures

State-space models (SSMs) like Mamba and hybrid transformer-SSM models.

Mixture-of-Experts (MoE)

Lower compute per input by activating only a subset of the model's parameters (the selected “experts”) for each input.

Multimodal AI

Understanding text, images, audio, video, and structured data together.

Self-Supervised Learning

Learning from unlabeled data at massive scale.

AI Agents

Deep learning models that not only answer questions but also take actions.

Smaller Specialized Models

Industry-specific deep learning systems.

Deep learning will remain the core of AI innovation for years to come.
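The Mixture-of-Experts idea from the list above can be sketched in a few lines: a router scores every expert for an input, only the top-k experts actually run, and their outputs are mixed using softmax weights over the selected scores. The experts (simple lambdas here rather than neural networks), the fixed router scores, and k = 2 are assumptions for illustration; in a real MoE layer the router is learned and the scores are computed per token.

```python
import math

def softmax(scores):
    m = max(scores)                          # subtract max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def moe_forward(x, experts, gate_scores, k=2):
    """Send x to the k experts with the highest gate scores and return the
    weighted mix of their outputs; the other experts are never evaluated."""
    top = sorted(range(len(experts)), key=lambda i: gate_scores[i], reverse=True)[:k]
    weights = softmax([gate_scores[i] for i in top])  # renormalize over the chosen k
    output = sum(w * experts[i](x) for w, i in zip(weights, top))
    return output, top

# Hypothetical experts and router scores, chosen only for illustration.
experts = [
    lambda x: 2 * x,
    lambda x: x + 10,
    lambda x: -x,
    lambda x: x * x,
]
gate_scores = [0.5, 2.0, -1.0, 1.0]

output, used = moe_forward(3.0, experts, gate_scores, k=2)
print("experts used:", used, "output:", round(output, 3))
```

Only two of the four experts are evaluated, which is where the savings come from: the total number of parameters can grow while the compute spent on each input stays roughly proportional to k.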

Glossary

Deep Learning:

Subset of ML based on multi-layer neural networks.

Neural Network:

Layered model that learns patterns from data.

Backpropagation:

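Algorithm that computes the gradient of the loss with respect to each model weight, which the optimizer then uses to adjust those weights.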

Epoch:

One full pass through the training dataset.

Transformer:

Modern deep learning architecture for language and multimodal tasks.

CNN:

Convolutional neural network, an architecture used mainly for image-related tasks.

Optimizer:

Algorithm that updates model parameters.

FAQ

Is deep learning the same as machine learning?

No — deep learning is a subset of ML using neural networks.

Does deep learning always need big data?

Usually, especially when training from scratch. Fine-tuning a pretrained model can work with much smaller datasets.

Are LLMs deep learning models?

Yes — they are transformer-based deep learning systems.

Is deep learning better than traditional ML?

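Usually, for complex unstructured data like text, images, and audio. For small or structured datasets, traditional ML is often just as effective and easier to interpret.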

Why did deep learning get popular?

Because GPUs and large datasets made it practical to train deep networks at scale.

Want Daily AI News in Simple Language?

If you enjoy expert guides like this, subscribe to AI Business Weekly — the fastest-growing AI newsletter for business leaders.

👉 Subscribe to AI Business Weekly

https://aibusinessweekly.net