Last Updated: December 1, 2025

Key Takeaways

Generative AI creates new content—text, images, code, audio, and video—rather than just analyzing existing data

It powers ChatGPT, DALL-E, Midjourney, GitHub Copilot, and hundreds of other modern AI applications

The technology uses foundation models like transformers and diffusion models trained on massive datasets

The generative AI market reached 44 billion dollars in 2024 and is projected to exceed 200 billion dollars by 2030

Organizations across all industries are deploying generative AI for content creation, customer service, software development, and decision support

Generative AI refers to artificial intelligence systems that create new content across multiple formats including text, images, code, audio, and video. Unlike traditional AI that analyzes and categorizes existing data, generative AI produces original outputs based on patterns learned from training data, enabling applications from conversational chatbots to realistic image generation to automated code writing.

The technology represents a fundamental shift in AI capabilities. Previous AI systems excelled at recognition and classification tasks like identifying objects in photos or detecting spam emails. Generative AI goes further by synthesizing entirely new content that didn't exist before, often indistinguishable from human-created work.

How Generative AI Works

Generative AI operates through sophisticated neural networks trained on enormous datasets to recognize patterns and relationships in content. The process involves two fundamental stages: training, in which the model learns from data, and inference, in which the trained model generates new content in response to a prompt.

Training begins with foundation models learning from massive datasets containing billions of examples. For language models like GPT-4 or Claude, training involves processing trillions of words from books, websites, and documents. Image models like DALL-E or Midjourney train on hundreds of millions of images paired with text descriptions. During training, models learn statistical patterns, relationships between concepts, styles and structures, and how different elements combine to create coherent content.

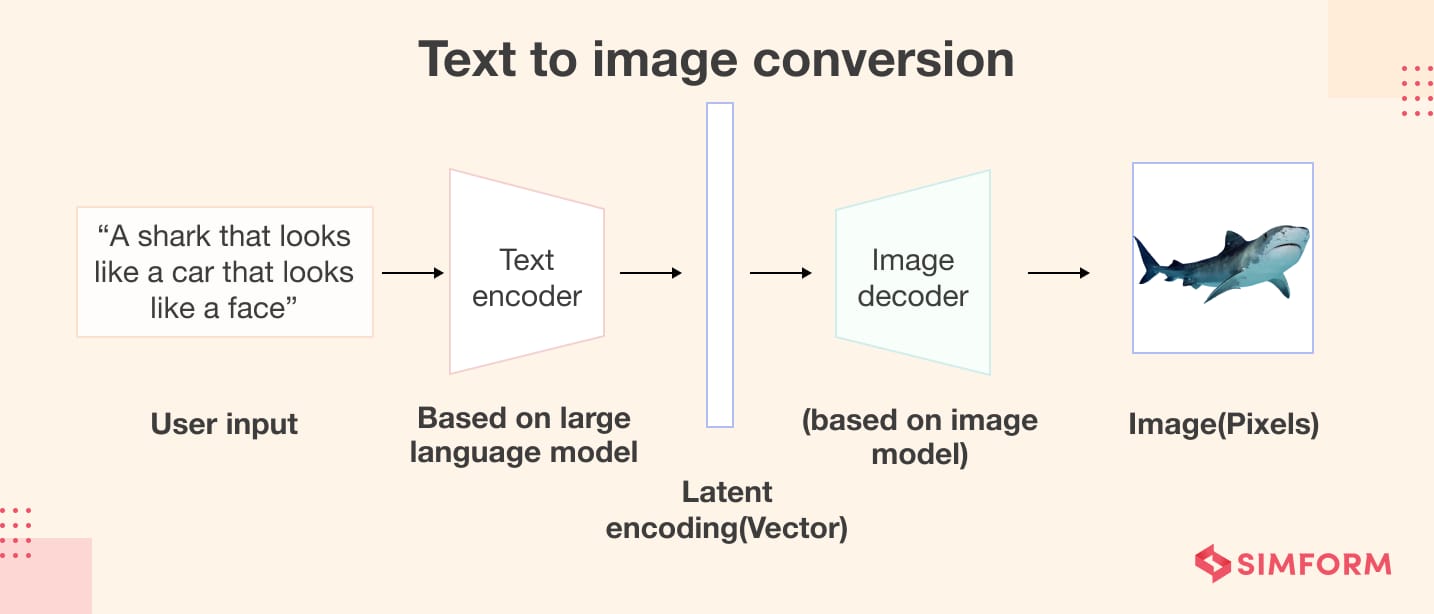

The architecture varies by content type. Transformer models power most text generation through attention mechanisms that weigh relationships between words across long sequences. These models predict the next most likely token based on context, enabling coherent text generation. Diffusion models dominate image generation by learning to remove noise from images iteratively, essentially reversing a corruption process to generate new visuals from random noise. Generative Adversarial Networks (GANs) use two competing networks—a generator creating content and a discriminator evaluating authenticity—pushing each other toward better results.
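The attention step described above can be sketched in a few lines of plain Python. This is a minimal illustration of scaled dot-product attention, not a production implementation: the function names and the toy vectors are assumptions made for this example, and real transformers also apply learned projection matrices to produce the queries, keys, and values, which are omitted here.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(dimension).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim) for k in keys]
        weights = softmax(scores)
        # Each output is the weighted average of the value vectors.
        mixed = [sum(w * v[j] for w, v in zip(weights, values))
                 for j in range(len(values[0]))]
        outputs.append(mixed)
    return outputs

# Three toy "token" vectors (made-up numbers). In self-attention the same
# sequence supplies the queries, keys, and values.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
contextual = attention(tokens, tokens, tokens)
```

Each row of `contextual` is a blend of all three input vectors, weighted by how strongly that token's query matches the other tokens' keys. That weighting is what lets the model relate words across a long sequence.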

Inference occurs when users provide prompts or inputs to trained models. The model processes the request, applies learned patterns, and generates a response: token by token for text, or through repeated denoising steps over the whole image for diffusion-based image models. Advanced models incorporate techniques like temperature control to trade consistency against creativity, top-k sampling to restrict each choice to the most likely candidates, and beam search to explore multiple generation paths.
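To make temperature and top-k sampling concrete, here is a small sketch of how one next token might be chosen from a model's raw scores. The function name `sample_next_token`, the candidate words, and their scores are all invented for illustration; real systems work over vocabularies of tens of thousands of tokens and often combine these controls with others.

```python
import math
import random

def sample_next_token(logits, temperature=1.0, top_k=None, rng=random):
    """Pick one token from a {token: raw score} mapping."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    # Rank candidates by score and keep only the k best, if requested.
    ranked = sorted(logits.items(), key=lambda item: item[1], reverse=True)
    if top_k is not None:
        ranked = ranked[:top_k]
    # Dividing by temperature sharpens (<1) or flattens (>1) the distribution.
    scaled = [score / temperature for _, score in ranked]
    peak = max(scaled)
    weights = [math.exp(s - peak) for s in scaled]  # unnormalized softmax
    tokens = [token for token, _ in ranked]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Hypothetical scores for the word that follows "The pet is a ..."
logits = {"cat": 2.0, "dog": 1.5, "car": 0.2, "sky": -1.0}
rng = random.Random(0)  # seeded so repeated runs behave the same way
print(sample_next_token(logits, temperature=0.7, top_k=2, rng=rng))
```

With `top_k=2` only "cat" and "dog" can ever be returned. Lowering the temperature makes "cat" increasingly dominant, while raising it gives "dog" a larger share.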

The generation quality depends heavily on training data quality and quantity, model architecture and parameter count, computational resources during both training and inference, and prompt engineering techniques used during interaction. Modern large language models contain hundreds of billions of parameters, requiring millions of dollars in compute resources to train but enabling remarkably human-like output generation.
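A quick back-of-the-envelope calculation shows why parameter count drives hardware requirements. The sketch below estimates only the memory needed to hold the weights; it assumes 16-bit (2-byte) weights by default and ignores activations, optimizer state, and everything else a real deployment needs. The helper name `weight_memory_gb` is made up for this example, and 175 billion is GPT-3's published parameter count.

```python
def weight_memory_gb(parameter_count, bytes_per_parameter=2):
    """Memory needed just to store the weights, in gigabytes (1 GB = 1e9 bytes)."""
    return parameter_count * bytes_per_parameter / 1e9

# 175 billion parameters at 2 bytes each (16-bit weights, an assumption).
print(weight_memory_gb(175e9))     # 350.0
# The same model stored with 4-byte (32-bit) weights.
print(weight_memory_gb(175e9, 4))  # 700.0
```

Even before any computation happens, a model of this size does not fit on a single consumer GPU, which is part of why both training and inference are costly.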

Types of Generative AI Models

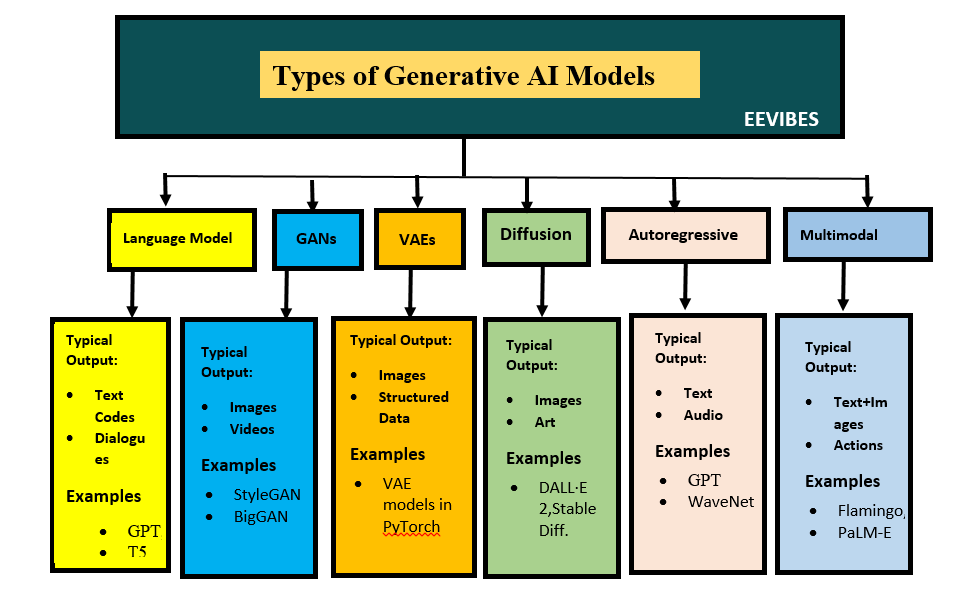

Generative AI encompasses several distinct model architectures, each optimized for different content types and use cases. Understanding these categories helps organizations select appropriate technologies for their needs.

Large Language Models represent the most visible generative AI category, producing human-like text for conversations, content creation, and reasoning tasks. GPT-4, Claude, Gemini, and LLaMA exemplify this category. These models excel at writing articles and emails, answering questions conversationally, generating and explaining code, summarizing documents, and translating between languages. They process text as sequences of tokens, predicting subsequent tokens based on context and learned patterns from training data.

Text-to-Image Models generate visual content from natural language descriptions, enabling users without artistic skills to create professional-quality images. DALL-E 3, Midjourney, Stable Diffusion, and Adobe Firefly lead this space. Applications include marketing asset creation, product visualization, concept art and design exploration, and personalized content generation. These models typically use diffusion or autoregressive techniques to progressively refine images from noise into coherent visuals matching text prompts.

Code Generation Models assist software developers by producing, completing, and debugging code based on natural language descriptions or existing code context. GitHub Copilot, Amazon CodeWhisperer, and Replit Ghostwriter demonstrate this category's impact. Developers use these tools for function and class generation from descriptions, code completion and suggestion, bug identification and fixing recommendations, and documentation generation. These models train on billions of lines of public code repositories, learning programming patterns, syntax, and best practices.

Audio and Music Generation Models create original sound content including speech, music, and sound effects. ElevenLabs for voice synthesis, MusicLM for music generation, and AudioCraft exemplify this category. Use cases span voiceover creation for videos and podcasts, personalized audio content, background music composition, and accessibility features like text-to-speech. These models learn acoustic patterns, musical structures, and voice characteristics from extensive audio datasets.

Video Generation Models produce moving visual content from text descriptions or images, representing the newest frontier in generative AI. Runway Gen-2, Pika Labs, and emerging capabilities from major AI labs demonstrate growing sophistication. Applications include marketing video creation, training content development, personalized video messages, and creative filmmaking tools. These models face significant computational challenges, as video generation requires maintaining consistency across frames while ensuring temporal coherence.

Multimodal Models process and generate across multiple content types simultaneously, understanding and creating combinations of text, images, audio, and video. GPT-4V, Gemini, and Claude with vision capabilities illustrate this convergence. These models enable comprehensive content analysis, cross-format content creation, richer interactive experiences, and more natural human-AI interaction.

TABLE 1: Generative AI Model Types and Capabilities

| Model Type | Example Tools | Primary Outputs | Key Applications | Training Data Size |
|---|---|---|---|---|
| Language Models | GPT-4, Claude, Gemini | Text, code | Content creation, chatbots, analysis | Trillions of tokens |
| Image Generation | DALL-E, Midjourney, Stable Diffusion | Images, art | Marketing, design, visualization | Hundreds of millions of images |
| Code Generation | Copilot, CodeWhisperer | Code, documentation | Software development, debugging | Billions of lines of code |
| Audio/Music | ElevenLabs, MusicLM | Voice, music, sound | Voiceovers, soundtracks, accessibility | Millions of hours of audio |
| Video Generation | Runway, Pika Labs | Video clips | Marketing, training, entertainment | Millions of video hours |

Generative AI vs Traditional AI

Generative AI and traditional AI serve fundamentally different purposes, employ distinct techniques, and deliver different types of value. Understanding these differences clarifies when to apply each approach.

Traditional AI, also called discriminative or analytical AI, focuses on analyzing and categorizing existing data to make predictions or decisions. These systems identify spam emails, recommend products based on purchase history, detect fraudulent transactions, diagnose diseases from medical images, and optimize supply chain routes. They excel at pattern recognition, classification, and prediction tasks where the goal involves understanding and acting on existing information.

Generative AI creates entirely new content that didn't exist before. Rather than analyzing a photograph, it generates original images. Instead of identifying spam, it writes email responses. Rather than predicting customer churn, it generates personalized marketing copy. The fundamental shift moves from recognition to creation.

The technical approaches differ significantly. Traditional AI typically uses decision trees, support vector machines, random forests, convolutional neural networks for image classification, and recurrent neural networks for sequence prediction. These models learn to map inputs to predefined output categories or numeric predictions. Generative AI employs transformer architectures for language, diffusion models for images, GANs for various content types, and variational autoencoders for data generation. These models learn probability distributions of training data, enabling sampling of new examples.

Training objectives diverge substantially. Traditional AI minimizes prediction errors, learning to correctly classify or predict based on labeled examples. Generative AI maximizes the likelihood of generating realistic new examples, learning the underlying structure and patterns of data itself. This distinction means generative models require different evaluation metrics—generated content quality, diversity, and coherence matter more than classification accuracy.
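The contrast between the two objectives can be shown with a deliberately tiny example. The height data below is invented, the midpoint threshold stands in for a trained classifier, and a single Gaussian per class stands in for a generative model; real systems of either kind are vastly more complex.

```python
import random
import statistics

# Made-up training data: heights in centimeters, grouped by label.
heights_cm = {
    "child": [110, 118, 125, 131, 122],
    "adult": [165, 172, 180, 158, 169],
}

# Discriminative view: learn a boundary that maps an input to a label.
threshold = (statistics.mean(heights_cm["child"])
             + statistics.mean(heights_cm["adult"])) / 2

def classify(height):
    """Assign a label to an existing measurement."""
    return "adult" if height >= threshold else "child"

# Generative view: model the distribution of the data, then sample from it.
def fit_gaussian(samples):
    """Estimate the mean and standard deviation of the samples."""
    return statistics.mean(samples), statistics.stdev(samples)

def generate(label, rng):
    """Produce a new, previously unseen height for the given label."""
    mean, std = fit_gaussian(heights_cm[label])
    return rng.gauss(mean, std)

rng = random.Random(0)
print(classify(150))           # labels an existing input
print(generate("adult", rng))  # creates a new example
```

The classifier can only answer questions about inputs it is given, while the fitted distribution can be sampled indefinitely to produce plausible new values. That is also why the two are evaluated differently: accuracy for the first, realism and diversity for the second.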

The business applications reveal complementary strengths. Traditional AI remains superior for tasks requiring precise predictions with measurable accuracy, analyzing large datasets to extract insights, automating rule-based decision-making, and identifying patterns or anomalies in existing data. Generative AI excels at creating marketing content and creative assets, providing conversational interfaces and customer service, generating code and technical documentation, and producing personalized content at scale.

Many modern AI systems combine both approaches. A customer service platform might use traditional AI to classify inquiry types and route requests while employing generative AI to craft personalized responses. An e-commerce system might use traditional AI for product recommendations and generative AI to create personalized product descriptions.

Real-World Applications

Generative AI delivers measurable business value across industries through applications that automate creative work, enhance customer experiences, and accelerate decision-making.

Content Creation and Marketing teams use generative AI to produce blog posts, social media content, email campaigns, and ad copy at unprecedented scale. Companies report 60 to 70 percent time savings on content development while maintaining brand voice through carefully engineered prompts. Jasper AI generates over 70 million pieces of content monthly for marketing teams. Visual content creation tools like Midjourney and DALL-E enable businesses to produce custom images without expensive photoshoots or graphic designers.

Customer Service and Support implementations leverage conversational AI to handle inquiries 24/7 across channels. Generative AI chatbots understand context, provide personalized responses, and resolve complex issues that defeated previous rule-based systems. Shopify reports its generative AI support assistant resolves 70 percent of customer inquiries autonomously with satisfaction scores matching human agents. Financial services firms deploy generative AI to explain complex products, guide customers through applications, and provide personalized financial advice.

Software Development productivity has transformed through AI-powered coding assistants. GitHub Copilot, which reached 300 million dollars in annual revenue, helps developers write code faster by generating functions from comments, completing code automatically, suggesting bug fixes and optimizations, and creating test cases. Surveys show developers using generative AI coding tools complete tasks 30 to 55 percent faster while reporting higher job satisfaction.

Drug Discovery and Healthcare leverage generative AI to design new molecules, predict protein structures, generate synthetic medical data for research, and accelerate clinical trial design. Insilico Medicine used generative AI to discover a novel drug candidate in 18 months at a fraction of traditional costs. Healthcare documentation tools generate clinical notes from doctor-patient conversations, reducing administrative burdens by 60 to 70 percent and allowing physicians to focus on patient care.

Design and Creative Industries employ generative AI for product design iteration, architectural visualization, fashion design exploration, and game asset creation. Coca-Cola used generative AI in its "Create Real Magic" campaign, enabling consumers to create brand artwork. Architectural firms generate multiple design variations in hours rather than weeks, accelerating client review cycles.

Financial Services applications include generating investment research reports, creating personalized financial plans, producing regulatory compliance documentation, and synthesizing market analysis. Bloomberg integrated generative AI trained on financial data to provide analysts with natural language insights and automated report generation.

Education and Training deployments create personalized learning content, generate practice problems adapted to student level, provide instant feedback on assignments, and create interactive tutoring experiences. Khan Academy's Khanmigo uses generative AI to provide personalized tutoring at scale, adapting explanations to individual learning styles.

TABLE 2: Generative AI Business Impact by Industry

| Industry | Primary Use Cases | Reported Efficiency Gains | Adoption Rate (2024) |
|---|---|---|---|
| Marketing | Content creation, personalization | 60-70% time savings | 73% |
| Customer Service | Chatbots, inquiry resolution | 70% autonomous resolution | 68% |
| Software Development | Code generation, debugging | 30-55% productivity increase | 92% |
| Healthcare | Clinical documentation, research | 60-70% documentation time saved | 45% |
| Financial Services | Research, analysis, reporting | 40% faster report creation | 58% |
| Design/Creative | Asset creation, iteration | 50% faster design cycles | 61% |
| Education | Personalized content, tutoring | 3x content creation speed | 52% |
| Legal | Document drafting, research | 50% faster document review | 41% |

Benefits and Limitations

Generative AI offers transformative advantages while presenting significant challenges that organizations must navigate carefully.

The benefits drive rapid adoption across industries. Unprecedented productivity gains emerge as AI generates in seconds what previously required hours or days of human effort. Content creators produce more output with consistent quality. Developers write code faster. Designers explore more concepts. Cost reduction follows naturally, as automated content generation requires less human labor while maintaining quality standards. Organizations report 40 to 70 percent cost savings on specific content creation workflows.

Accessibility democratizes creative capabilities, enabling people without specialized skills to produce professional-quality content. Non-designers create marketing images. Non-programmers build functional applications. Non-writers craft compelling copy. This democratization expands what small teams and individuals can accomplish. Personalization at scale becomes economically viable, as generative AI tailors content to individual users without prohibitive costs. Marketing messages, product recommendations, and customer service responses adapt to each person.

Innovation acceleration manifests through rapid prototyping and exploration. Designers test hundreds of concepts quickly. Researchers explore more hypotheses. Product teams iterate faster. The time from idea to testable prototype compresses dramatically. Round-the-clock availability is a further advantage: AI systems do not tire, and they maintain consistent performance regardless of time, demand volume, or workload peaks.

However, critical limitations require careful management. Accuracy and hallucination concerns remain paramount. Generative AI confidently produces incorrect information, fabricated facts, and nonsensical outputs mixed with accurate content. High-stakes applications require human verification, fact-checking systems, and validation processes. Medical, legal, and financial applications demand particular caution.

Quality inconsistency challenges enterprise adoption. The same prompt may yield varying quality across runs. Maintaining brand voice, factual accuracy, and appropriate tone requires careful prompt engineering, output review, and iterative refinement. Organizations struggle to ensure consistent quality without extensive human oversight.

Bias and ethical concerns arise because models learn from training data reflecting societal biases. Generated content may perpetuate stereotypes, favor certain demographics, or produce culturally inappropriate outputs. Responsible deployment requires bias testing, diverse training data, and ongoing monitoring.

Intellectual property questions remain unresolved. Training data often includes copyrighted material, raising legal concerns about outputs. Questions about ownership of AI-generated content, liability for problematic outputs, and fair compensation for training data creators continue evolving through legislation and litigation.

Security vulnerabilities include prompt injection attacks that manipulate models into ignoring safety guidelines, data leakage where models reveal training data, and malicious use for generating misinformation, deepfakes, or harmful content. Robust security measures, access controls, and content filtering systems are essential.

Environmental costs deserve consideration. Training large generative AI models consumes enormous amounts of energy, and cooling the data centers that run them consumes water. Training GPT-3 produced an estimated 552 metric tons of CO2-equivalent emissions. Organizations must balance AI benefits against environmental impact.

Successful implementations start with appropriate use cases where errors carry limited consequences, implement human-in-the-loop review processes, establish clear guidelines for acceptable outputs, continuously monitor for bias and quality issues, and maintain transparency about AI-generated content with users.

Frequently Asked Questions

What's the difference between generative AI and ChatGPT?

ChatGPT is a specific generative AI application developed by OpenAI. Generative AI is the broader category of AI systems that create new content. ChatGPT uses generative AI technology, specifically large language models, to have conversations and generate text. Other generative AI systems include DALL-E for images, Midjourney for art, and GitHub Copilot for code. Think of generative AI as the technology category and ChatGPT as one popular product using that technology.

How much does generative AI cost to implement?

Costs vary dramatically based on approach. Using existing platforms like ChatGPT, Claude, or Midjourney through APIs typically costs pennies per request, making small-scale implementation affordable for any business. Enterprise implementations with custom models, fine-tuning, and infrastructure can range from tens of thousands to millions of dollars. Most organizations start with API-based pilots costing a few hundred to a few thousand dollars monthly before scaling or building custom solutions.
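A rough estimate of API spend can be worked out from request volume and text length. The sketch below uses the rule of thumb that one token is about three-quarters of a word. The function name, the traffic figures, and the price of 5 dollars per million tokens are all hypothetical; actual pricing differs by provider and model, and usually distinguishes input tokens from output tokens.

```python
def estimate_monthly_cost(requests_per_day, words_per_request,
                          price_per_million_tokens, days=30):
    """Rough monthly API cost in dollars for text requests."""
    # Rule of thumb: one token is roughly three-quarters of a word.
    tokens_per_request = words_per_request / 0.75
    monthly_tokens = requests_per_day * days * tokens_per_request
    return monthly_tokens / 1_000_000 * price_per_million_tokens

# Hypothetical pilot: 2,000 requests a day, 750 words each (prompt plus
# response), at an assumed price of $5 per million tokens.
print(estimate_monthly_cost(2000, 750, 5.0))  # 300.0
```

Under these assumed numbers the pilot lands in the few-hundred-dollars range. Scaling request volume or text length tenfold scales the bill tenfold.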

Can generative AI replace human workers?

Generative AI augments rather than replaces most human roles. It excels at automating repetitive content creation, accelerating workflows, and handling routine tasks. However, it requires human judgment for strategic decisions, creative direction, quality control, and ethical oversight. Jobs are transforming to incorporate AI assistance rather than disappearing. Workers who learn to leverage generative AI effectively increase their productivity and value significantly.

Is content created by generative AI copyrightable?

Copyright law regarding AI-generated content remains unsettled and varies by jurisdiction. In the United States, the Copyright Office currently holds that purely AI-generated content without human creative input cannot be copyrighted. Content with substantial human contribution using AI as a tool may qualify for protection. Businesses should consult legal counsel regarding their specific use cases and jurisdictions.

How do I ensure generative AI outputs are accurate?

Implement multiple verification layers including human review for high-stakes content, fact-checking against authoritative sources, consistency checks across multiple generation runs, confidence scoring when available from models, and domain expert validation for specialized content. Never blindly trust generative AI outputs for important decisions. Treat AI as a draft generator requiring human verification rather than a definitive source of truth.
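One of these layers, the consistency check across multiple generation runs, is simple to sketch. The example below assumes the same question has already been sent to a model several times and the answers collected in a list; the function name, the sample answers, and the 60 percent agreement threshold are illustrative choices, not recommended values.

```python
from collections import Counter

def consistency_check(answers, min_agreement=0.6):
    """Return (most common answer, agreement ratio, needs_review flag)."""
    if not answers:
        raise ValueError("at least one answer is required")
    # Normalize case and whitespace so trivial differences do not count.
    normalized = [a.strip().lower() for a in answers]
    top_answer, count = Counter(normalized).most_common(1)[0]
    agreement = count / len(normalized)
    # Flag the result for human review when the runs disagree too often.
    return top_answer, agreement, agreement < min_agreement

# Hypothetical outputs from five runs of the same prompt.
runs = ["Paris", "paris ", "Paris", "Lyon", "Paris"]
print(consistency_check(runs))  # ('paris', 0.8, False)
```

Agreement between runs does not prove an answer is correct, since a model can be consistently wrong. Disagreement is still a cheap signal that an output deserves human review.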

What skills do employees need to work with generative AI?

Employees need basic prompt engineering skills to craft effective inputs, critical thinking to evaluate output quality, domain expertise to verify accuracy and appropriateness, ethical judgment to identify problematic content, and basic technical literacy about AI capabilities and limitations. Most roles don't require programming or deep technical knowledge. Clear communication skills and willingness to experiment matter most.

How is generative AI regulated?

Regulation varies globally and evolves rapidly. The European Union's AI Act classifies high-risk AI applications requiring strict compliance. The United States takes a sector-specific approach through existing agencies. China implements strict content controls and registration requirements. Organizations should monitor regulatory developments in their jurisdictions and implement responsible AI governance frameworks regardless of legal requirements.

Key Terms Glossary

Foundation Model: A large AI model trained on broad data that can be adapted for multiple tasks, serving as the base for specialized applications like ChatGPT or DALL-E.

Transformer: A neural network architecture using attention mechanisms to process sequential data, forming the backbone of modern large language models.

Diffusion Model: A generative model that learns to remove noise from data iteratively, enabling high-quality image generation from random starting points.

Tokens: The basic units of text that language models process, roughly equivalent to three-quarters of a word, which determine context limits and processing costs.

Fine-Tuning: The process of adapting a pre-trained foundation model to specific tasks or domains by training on specialized datasets.

Prompt: The input text or instructions provided to generative AI models to guide their output generation.

Hallucination: When generative AI produces plausible-sounding but factually incorrect or fabricated information presented with confidence.

Parameters: The numerical weights within neural networks that determine model behavior, with more parameters generally enabling more sophisticated capabilities.

Inference: The process of using a trained model to generate outputs from new inputs, distinct from the training phase.

Temperature: A parameter controlling randomness in generation, where lower values produce more predictable outputs and higher values increase creativity and variation.

Multimodal: AI systems that can process and generate multiple types of content like text, images, and audio simultaneously.

Conclusion

Generative AI represents a paradigm shift in artificial intelligence, moving from systems that analyze existing data to those that create entirely new content. The technology powers applications from conversational chatbots to image generators to coding assistants, delivering measurable productivity gains and cost reductions across industries.

The rapid adoption trajectory reflects genuine business value. Organizations deploying generative AI report significant efficiency improvements in content creation, customer service, software development, and decision support. The market's growth from 44 billion dollars in 2024 toward a projected 200 billion dollars by 2030 demonstrates sustained confidence in the technology's potential.

Success requires understanding both capabilities and limitations. While generative AI produces impressive outputs at scale, it demands human oversight for accuracy verification, quality control, and ethical governance. Organizations that combine AI's speed and scale with human judgment and creativity achieve the best results.

For businesses exploring generative AI, starting with well-defined use cases, implementing appropriate verification processes, training employees on effective utilization, and maintaining realistic expectations about capabilities establish foundations for success. The technology continues evolving rapidly, with improvements in accuracy, efficiency, and accessibility arriving regularly.

As generative AI becomes standard business infrastructure, those who develop expertise in deploying and managing these systems position themselves for competitive advantage in an AI-augmented economy.