Last Updated: December 1, 2025.

Professional working on laptop crafting AI prompts with visual representation of prompt engineering techniques and AI model interaction flowchart

Prompt engineering is the practice of designing and refining inputs to guide artificial intelligence models, particularly large language models, toward generating desired outputs. Rather than simply asking questions or issuing commands, prompt engineering involves strategically crafting instructions with appropriate context, structure, and examples to maximize the accuracy, relevance, and usefulness of AI-generated responses.

The discipline represents the critical bridge between human intent and machine understanding. As generative AI systems like ChatGPT, Claude, and Google Gemini become integral to business operations, the ability to effectively communicate with these models through well-engineered prompts determines whether organizations extract genuine value or struggle with inconsistent, irrelevant results.

Unlike traditional software that follows explicit programming instructions, large language models trained on vast datasets respond based on probabilistic patterns in their training data. This fundamental characteristic means that how you ask matters as much as what you ask. A vague prompt like "write about marketing" might produce generic content, while a precisely engineered prompt specifying audience, tone, length, and key points delivers targeted, actionable results.

By 2026, McKinsey estimates that prompt engineering capabilities will be fundamental skills across most knowledge worker roles, with organizations investing heavily in training employees to maximize their AI tool effectiveness. This reflects growing recognition that generative AI's business value depends not just on model capabilities but on human ability to harness those capabilities through skilled prompting.

Table of Contents

How Prompt Engineering Works

Prompt engineering operates through systematic approaches to structuring AI inputs that improve output quality, consistency, and alignment with intended goals. This process combines understanding of how language models function with practical techniques for shaping their responses.

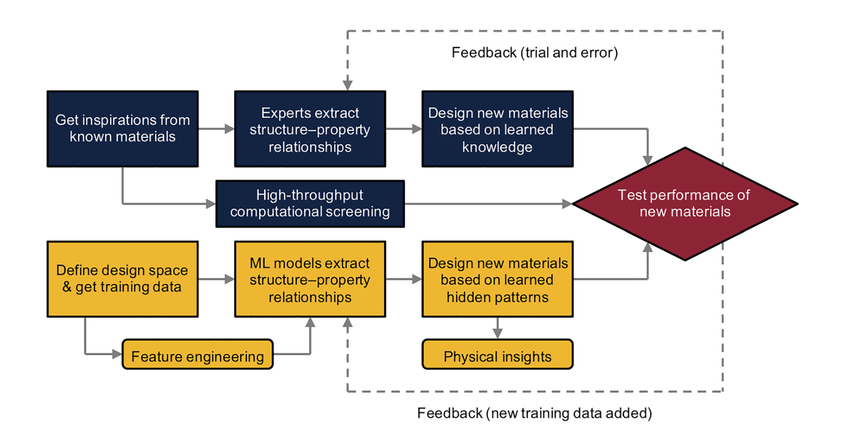

The foundation begins with understanding model behavior. Large language models generate responses by predicting the most likely next tokens based on patterns learned during training on billions of text examples. They lack true understanding or reasoning in the human sense but excel at pattern matching and continuation. This means models respond to statistical likelihoods shaped by their training data, making prompt structure and context critical factors in determining output quality.

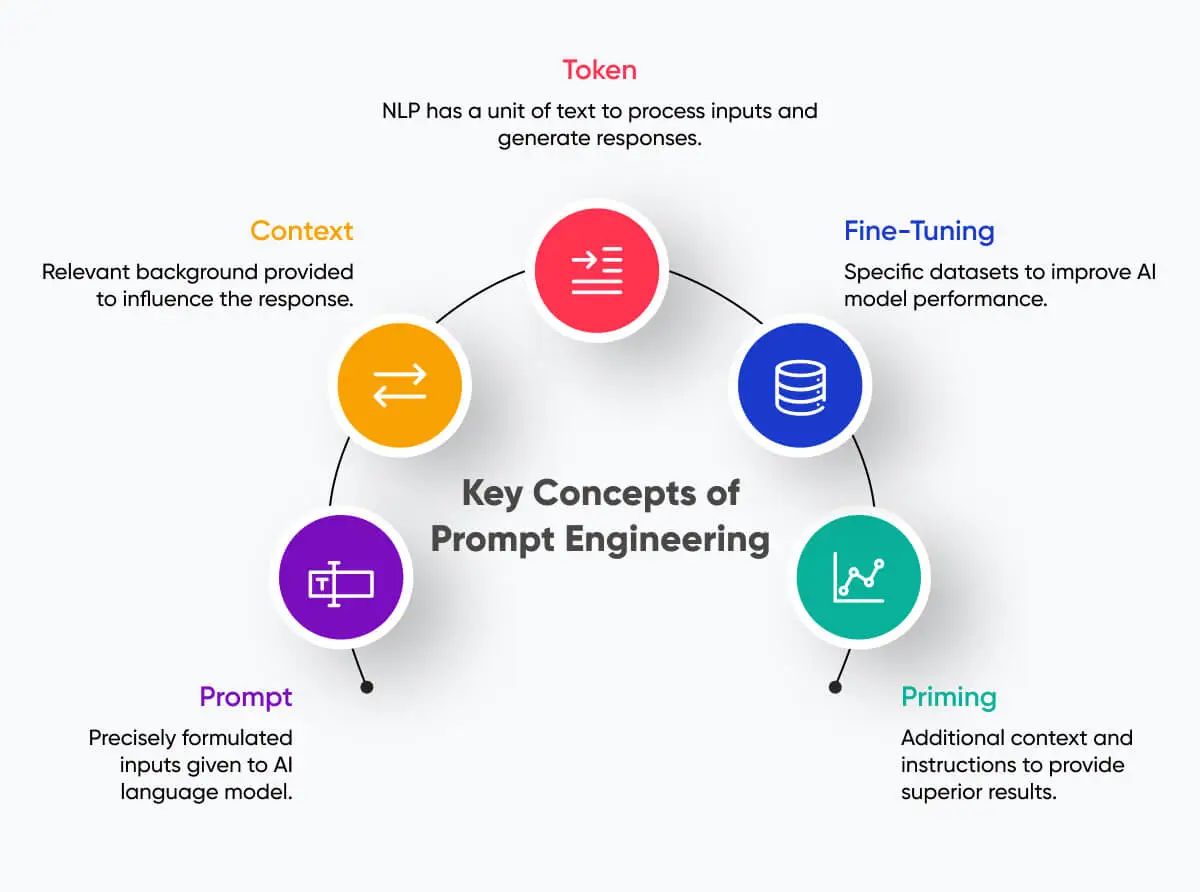

Effective prompts typically contain several key components. Clear instructions specify exactly what the model should do, using precise verbs like "summarize," "analyze," "compare," or "generate." Context provision gives the model necessary background information, including relevant facts, constraints, or situational details. Role assignment frames the model's perspective, such as "You are an experienced financial analyst" or "Act as a customer support specialist." Output specifications define desired format, length, tone, and structure. Examples demonstrate expected results through one or more sample inputs and outputs.

The engineering process involves iteration and refinement. Practitioners start with initial prompts, evaluate outputs against success criteria, identify where results fall short, adjust prompt elements systematically, and test variations to determine optimal formulations. This experimental approach treats prompting as an engineering discipline rather than casual conversation.

Advanced prompt engineering incorporates understanding of model limitations and behaviors. Models may struggle with very long contexts, losing track of early information. They sometimes hallucinate, generating plausible-sounding but incorrect information. They exhibit recency bias, weighting information near the end of prompts more heavily. They can be sensitive to phrasing, with small wording changes producing significantly different outputs. Skilled prompt engineers account for these characteristics when designing inputs.

The sophistication of prompts varies based on task complexity. Simple tasks might require only brief, clear instructions. Complex workflows demand multi-step prompts with explicit reasoning guidance, error-checking instructions, and output validation criteria. Enterprise applications often develop prompt libraries containing tested, optimized templates for common use cases, ensuring consistency across teams and reducing the need for individual employees to engineer prompts from scratch.

Core Prompt Engineering Techniques

Prompt engineering has evolved a repertoire of proven techniques that consistently improve AI model performance across diverse applications. Understanding and applying these methods enables practitioners to extract maximum value from language models.

Zero-shot prompting provides instructions without examples, relying on the model's pre-trained knowledge to complete tasks. This approach works best for straightforward tasks the model encountered frequently during training, such as basic summarization, simple classification, or general knowledge questions. A zero-shot prompt might be "Translate this text to Spanish" followed by the text, with no translation examples provided.

Few-shot prompting includes one or more examples demonstrating desired input-output patterns before presenting the actual task. This technique significantly improves performance on specialized tasks, unusual formats, or domain-specific applications. For instance, providing three examples of customer reviews classified as positive, negative, or neutral trains the model to classify subsequent reviews consistently. Research shows few-shot prompting can improve accuracy by 30 to 50 percent compared to zero-shot approaches for complex classification tasks.

Chain-of-thought prompting instructs models to show their reasoning process step-by-step before reaching conclusions. By adding phrases like "Let's think through this step by step" or providing examples that include reasoning chains, this technique dramatically improves performance on logic problems, mathematical calculations, and complex analytical tasks. Studies demonstrate chain-of-thought prompting enables models to solve problems they fail entirely with standard prompting.

Role prompting assigns the model a specific persona, expertise, or perspective that shapes response style and content. Instructing a model to respond "as an expert tax accountant" or "as a kindergarten teacher" influences vocabulary, depth, tone, and framing. This technique proves particularly valuable for domain-specific applications where specialized knowledge and communication styles matter.

Constraint-based prompting explicitly defines boundaries, limitations, and requirements for outputs. Specifying word count limits, prohibited topics, required information elements, formatting requirements, and audience appropriateness ensures outputs meet precise specifications. Enterprise applications rely heavily on constraints to maintain brand voice, regulatory compliance, and output consistency.

Prompt chaining breaks complex tasks into sequential steps, where each prompt builds on previous outputs. Rather than asking a model to complete an entire research report in one prompt, chaining might first request outline generation, then section-by-section content creation, followed by integration and refinement. This approach improves quality for multifaceted projects while making the process more transparent and controllable.

ReAct prompting combines reasoning and action, instructing models to alternate between thinking about problems and taking actions like searching databases or calling APIs. This technique enables models to gather information dynamically, verify facts, and perform multi-step processes that require external data access.

Self-consistency prompting generates multiple responses to the same prompt, then identifies the most common or consistent answer. For tasks with definitive correct answers, this majority-voting approach reduces errors from individual response variability. Applications include mathematical problem-solving, factual question answering, and decision-making scenarios.

TABLE 1: Core Prompt Engineering Techniques Comparison

Technique | Best Use Cases | Complexity | Typical Improvement |

|---|---|---|---|

Zero-Shot | Simple, common tasks | Low | Baseline |

Few-Shot | Specialized formats, domain tasks | Medium | 30-50% accuracy gain |

Chain-of-Thought | Logic, math, complex reasoning | Medium | 50-100% on complex problems |

Role Prompting | Domain expertise, tone control | Low | Varies by application |

Constraint-Based | Compliance, formatting, boundaries | Low | Ensures specifications met |

Prompt Chaining | Multi-step workflows | High | Significant for complex tasks |

ReAct | Information gathering, actions | High | Essential for dynamic tasks |

Self-Consistency | High-stakes accuracy needs | Medium | 10-30% error reduction |

Prompt Engineering vs Traditional Programming

Prompt engineering and traditional software programming both aim to direct computer behavior toward desired outcomes, but they differ fundamentally in approach, skills required, and operational characteristics. Understanding these distinctions helps organizations determine appropriate applications for each methodology.

Traditional programming involves writing explicit instructions in formal programming languages that computers execute deterministically. Programmers define precise logic flows, data structures, and algorithms that produce identical outputs given identical inputs. The relationship between code and behavior is direct and predictable. If a program calculates sales tax, it follows exact mathematical formulas every time without variation.

Prompt engineering works with probabilistic systems that generate responses based on learned patterns rather than explicit programming. The same prompt may produce different outputs across multiple runs, though well-engineered prompts increase consistency. Rather than defining exact logic, prompt engineers shape model behavior through natural language instructions, examples, and context. The relationship between input and output involves interpretation and pattern matching rather than deterministic execution.

The skill sets differ significantly. Traditional programmers must master programming languages, algorithms, data structures, software architecture patterns, and debugging methodologies. They think in terms of variables, functions, loops, and conditional logic. Prompt engineers need understanding of language model capabilities and limitations, natural language communication skills, iterative experimentation mindset, domain knowledge for their application area, and analytical skills to evaluate output quality. Many effective prompt engineers come from non-technical backgrounds including writing, teaching, research, and domain expertise.

Development cycles follow different patterns. Traditional programming typically involves design and planning phases, coding implementation, compilation and debugging, testing against specifications, and deployment to production environments. Prompt engineering cycles through rapid experimentation with prompt variations, evaluation of outputs against success criteria, iterative refinement based on results, and documentation of effective patterns. The feedback loop is much faster, often measuring in minutes rather than days or weeks.

Error handling approaches diverge substantially. Traditional programs fail explicitly with error messages when they encounter unexpected conditions. Programmers implement try-catch blocks, input validation, and exception handling to manage errors predictably. Language models rarely fail explicitly but may produce incorrect, irrelevant, or hallucinated outputs without indication that something went wrong. Prompt engineers must implement output validation, consistency checks, and human review processes to catch errors.

Maintenance and updates reflect different challenges. Traditional code requires explicit modification to change behavior, but changes are precise and predictable. Updating a tax calculation means modifying specific code lines with full control over the change. Prompt engineering adjustments may have unexpected side effects, as changes intended to improve one aspect of outputs might degrade others. However, prompt updates don't require code deployment, version control complexity, or technical release processes.

From a business perspective, traditional programming excels at tasks requiring exact specifications, deterministic behavior, complex logic and calculations, security-critical operations, and long-term system stability. Prompt engineering shines for natural language understanding and generation, creative content creation, flexible interpretation of ambiguous inputs, rapid prototyping and iteration, and tasks requiring human-like reasoning patterns.

Many modern applications combine both approaches. Traditional code handles data processing, business logic, security, and system integration, while prompt engineering enables natural language interfaces, content generation, and intelligent interpretation. A customer service application might use traditional programming for database queries and authentication while leveraging prompt engineering for understanding customer intent and generating personalized responses.

Real-World Prompt Engineering Applications

Prompt engineering drives practical value across industries through applications that transform how organizations operate, make decisions, and serve customers. These implementations demonstrate the technology's versatility and impact beyond theoretical potential.

Content Creation and Marketing represents one of the most widespread applications. Marketing teams use engineered prompts to generate blog posts, social media content, email campaigns, and product descriptions at scale while maintaining brand voice consistency. Companies like Jasper and Copy.ai have built entire platforms around prompt engineering templates for marketing content. Organizations report 60 to 70 percent time savings on content creation tasks while maintaining quality standards through carefully engineered prompts that specify tone, length, key messages, and audience targeting.

Customer Service and Support leverages prompt engineering to power AI chatbots and virtual assistants that handle inquiries with human-like understanding. Rather than rigid decision trees, modern support systems use prompts that instruct models to interpret customer intent, access relevant knowledge bases, provide empathetic responses, and escalate appropriately. Shopify reports that prompt-engineered customer service agents resolve 70 percent of inquiries without human intervention, with customer satisfaction scores matching or exceeding human agent performance for routine questions.

Software Development has emerged as a high-impact use case, with developers using prompt engineering to generate code, debug errors, write documentation, and create test cases. GitHub Copilot, which reached 300 million dollars in annual revenue, relies heavily on effective prompt engineering by users to generate useful code suggestions. Developers who master prompting techniques report productivity improvements of 30 to 55 percent compared to those using basic prompts. Effective prompts specify programming language, frameworks, input-output requirements, edge cases to handle, and code style preferences.

Legal and Compliance applications use prompt engineering to review contracts, identify regulatory requirements, summarize case law, and draft standard legal documents. Law firms and corporate legal departments employ prompts that instruct models to extract specific clauses, flag non-standard terms, compare contracts against templates, and identify potential risks. These applications require sophisticated prompts that define legal terminology precisely, specify jurisdictions, and maintain appropriate caution in language since legal errors carry significant consequences.

Healthcare and Medical Documentation benefits from prompts engineered to generate clinical notes from visit transcriptions, suggest diagnostic considerations based on symptoms, extract relevant information from medical literature, and create patient-friendly explanations of medical concepts. Ambient clinical intelligence platforms use prompt engineering to transform doctor-patient conversations into structured medical records, reducing documentation time by 60 to 70 percent. Medical prompt engineering requires extreme attention to accuracy, appropriate hedging of suggestions, and compliance with privacy regulations.

Data Analysis and Business Intelligence employs prompt engineering to interpret datasets, generate insights, create visualizations, and produce executive summaries. Analysts use prompts that instruct models to identify trends, correlations, and anomalies in data, then explain findings in business terms. Financial services firms use engineered prompts to analyze market data, generate investment research reports, and summarize earnings calls. These applications benefit from prompts that specify analytical frameworks, required metrics, and presentation formats aligned with business decision-making processes.

Education and Training leverages prompt engineering to create personalized learning experiences, generate practice problems, provide explanations at appropriate complexity levels, and offer feedback on student work. Educational technology platforms use prompts engineered to adapt content difficulty, explain concepts multiple ways, and identify knowledge gaps. Teachers report that well-engineered prompts for creating differentiated instruction materials save 5 to 10 hours weekly while improving content quality and personalization.

Human Resources and Recruiting applications include resume screening, interview question generation, job description creation, and candidate communication. HR teams use prompts that define required qualifications, company culture fit indicators, and appropriate communication tone. Recruiting platforms employ prompt engineering to match candidates with positions, generate personalized outreach messages, and summarize candidate profiles for hiring managers.

TABLE 2: Prompt Engineering Impact Across Industries

Industry | Primary Application | Key Metrics | Implementation Complexity |

|---|---|---|---|

Marketing | Content generation, personalization | 60-70% time savings | Medium |

Customer Service | Virtual agents, inquiry resolution | 70% autonomous resolution | Medium |

Software Development | Code generation, debugging | 30-55% productivity gain | Low-Medium |

Legal | Contract review, document drafting | 50% faster document review | High |

Healthcare | Clinical documentation, research | 60-70% documentation time saved | High |

Financial Services | Analysis, report generation | 40% faster report creation | Medium-High |

Education | Personalized learning, content creation | 5-10 hours saved weekly | Low-Medium |

Human Resources | Recruiting, screening, communications | 3x more candidates reviewed | Medium |

Benefits and Limitations

Prompt engineering delivers substantial advantages that explain its rapid adoption, but practitioners must understand and navigate inherent limitations to deploy the technology effectively.

The benefits are compelling across multiple dimensions. Accessibility democratizes AI capabilities, enabling non-technical users to harness advanced language models without programming expertise. Subject matter experts can directly create AI-powered tools for their domains rather than waiting for software development cycles. This accessibility reduces barriers between domain knowledge and AI implementation.

Speed and iteration velocity represent significant advantages. Traditional software development measures progress in weeks or months, while prompt engineering enables same-day prototyping and refinement. Organizations can test ideas, gather feedback, and iterate rapidly without deployment overhead. Marketing teams can develop and test dozens of content variations in hours rather than commissioning lengthy creative processes.

Cost efficiency stems from reduced development resources and faster time to value. Building custom software applications requires expensive engineering teams and months of development. Prompt engineering projects often succeed with smaller teams and shorter timelines. Companies report 50 to 80 percent cost reductions for certain applications compared to traditional software development approaches.

Flexibility and adaptability shine when requirements change or applications need customization. Modifying a prompt takes minutes compared to code changes requiring development, testing, and deployment. Organizations can rapidly adjust AI behavior for new products, market conditions, or customer feedback. Seasonal businesses modify prompts for holiday campaigns without technical releases.

Natural language interaction aligns with human communication patterns, reducing training burdens and cognitive load. Employees craft prompts using familiar language rather than learning programming syntax. This natural interface accelerates adoption and enables broader organizational participation in AI initiatives.

However, important limitations constrain prompt engineering applications. Consistency challenges arise from the probabilistic nature of language models. The same prompt may produce varying outputs across runs, creating reliability concerns for applications requiring exact specifications. Financial calculations, legal determinations, and regulated processes need deterministic behavior that prompt engineering struggles to guarantee absolutely.

Hallucination risks remain persistent, as models confidently generate plausible but incorrect information. Even well-engineered prompts cannot eliminate this behavior entirely. Applications requiring factual accuracy need robust validation mechanisms, human oversight, or hybrid approaches combining prompt engineering with traditional fact-checking systems.

Context limitations constrain how much information models can consider. Most language models have maximum context windows of 32,000 to 200,000 tokens, limiting their ability to process very large documents, extensive conversation histories, or comprehensive datasets in single interactions. Complex applications requiring synthesis of massive information volumes may need architectural solutions beyond pure prompt engineering.

Transparency and explainability challenges emerge because models don't provide clear reasoning for specific outputs. When a model generates particular content or makes certain decisions, tracing exactly why proves difficult. Regulated industries and high-stakes applications often require explainable decision-making that prompt engineering alone cannot fully provide.

Security and prompt injection vulnerabilities create risks where malicious users craft inputs that override intended behavior. Models might be manipulated to ignore safety guidelines, reveal confidential information, or produce harmful content. Robust prompt engineering must implement defensive measures, input validation, and output filtering to mitigate these risks.

Dependency on model capabilities means prompt engineering effectiveness varies across different models and changes as models update. Prompts optimized for one model may perform differently on another. Model updates can unexpectedly alter behavior, requiring prompt re-engineering. Organizations must account for this variability in long-term planning.

Organizations successfully deploying prompt engineering typically start with lower-stakes applications where output variability is acceptable, implement validation and review processes appropriate to risk levels, combine prompt engineering with traditional programming for hybrid solutions, continuously monitor and refine prompts based on performance data, and maintain realistic expectations about capabilities and limitations.

Career Opportunities in Prompt Engineering

Prompt engineering has rapidly evolved from an experimental practice to a formal career path with growing demand across industries. Organizations recognize that maximizing AI value requires skilled practitioners who can bridge human intent and machine capability.

The job market shows dramatic growth. LinkedIn reported 3,000 percent increases in prompt engineering job postings between 2022 and 2024. Roles range from individual contributors optimizing specific applications to strategic positions designing enterprise-wide prompt architectures. Salary ranges vary widely based on experience and organization, with entry-level positions starting around 60,000 to 80,000 dollars, mid-level roles commanding 90,000 to 140,000 dollars, and senior prompt engineers at major technology companies earning 150,000 to 250,000 dollars or more.

Role types span several categories. Prompt Engineers focus on designing, testing, and optimizing prompts for specific applications, collaborating with product teams to understand requirements, building prompt libraries and templates, and measuring performance improvements. AI Product Managers incorporate prompt engineering into product strategy, define use cases and success metrics, coordinate between technical and business stakeholders, and guide AI feature development roadmaps. Conversational AI Designers specialize in chatbot and virtual assistant experiences, crafting dialogue flows and response patterns, ensuring natural conversational experiences, and optimizing for user satisfaction. Domain-Specific Prompt Specialists combine industry expertise with prompt engineering skills, working in legal, medical, financial, or technical domains where specialized knowledge proves critical.

Required skills blend technical understanding with communication abilities. Core competencies include natural language proficiency with strong writing and communication skills, analytical thinking to evaluate output quality systematically, understanding of language model capabilities and limitations, experimentation mindset with comfort testing multiple approaches, domain knowledge in target application areas, and basic technical literacy about AI concepts even without deep programming expertise.

Advanced practitioners develop additional capabilities including programming skills for integrating prompts with applications, data analysis abilities to measure performance quantitatively, knowledge of prompt engineering frameworks and libraries, understanding of AI safety and ethics considerations, and project management skills for enterprise implementations.

Educational pathways vary considerably. Unlike traditional software engineering with established computer science degree requirements, prompt engineering draws talent from diverse backgrounds. Many successful prompt engineers studied linguistics, English, communications, technical writing, domain expertise fields like law or medicine, or psychology and cognitive science. Formal education programs are emerging, with universities and online platforms offering prompt engineering courses and certifications, though the field remains young enough that practical experience often matters more than credentials.

Professional development resources include online courses from platforms like Coursera, edX, and Udacity, community resources and forums where practitioners share techniques, documentation from AI providers like OpenAI, Anthropic, and Google, industry conferences focused on generative AI applications, and internal company training programs as organizations build prompt engineering capabilities.

Career progression paths typically start with junior prompt engineers working on defined applications with supervision, advance to mid-level roles independently managing prompt engineering projects, and progress to senior positions architecting enterprise prompt strategies and mentoring teams. Some practitioners transition into adjacent roles including AI product management, technical writing and documentation, customer success for AI platforms, or consulting helping organizations implement prompt engineering practices.

The field's future trajectory remains dynamic. As language models improve, prompt engineering techniques will evolve. Some predict the discipline will become less critical if models develop abilities to interpret intent from simpler prompts. Others argue prompt engineering will grow more sophisticated, becoming analogous to traditional software engineering as AI systems tackle increasingly complex tasks. Most likely, prompt engineering will remain valuable but evolve in focus, shifting from basic prompt crafting toward strategic design of AI-powered workflows and experiences.

Organizations building prompt engineering capabilities typically establish centers of excellence that develop best practices, create reusable prompt libraries for common use cases, provide training and certification programs, maintain quality standards and governance frameworks, and foster communities of practice for knowledge sharing. This organizational approach scales prompt engineering effectiveness beyond individual practitioners.

Frequently Asked Questions

Do I need programming skills for prompt engineering?

No programming expertise is required for basic to intermediate prompt engineering. The practice relies on natural language communication and analytical thinking rather than coding. However, advanced applications that integrate prompts with software systems, automate prompt workflows, or implement complex validation logic do benefit from programming knowledge. Many successful prompt engineers come from non-technical backgrounds including writing, teaching, and domain expertise fields.

How long does it take to learn prompt engineering?

Basic prompt engineering skills can be developed in days or weeks through practice and experimentation. Crafting clear instructions, providing examples, and iteratively refining prompts are intuitive processes that improve quickly with hands-on experience. Advanced techniques and domain-specific applications require months of focused learning and practice. Professional-level expertise that enables architectural design and strategic implementation typically develops over 6 to 18 months of consistent work.

Which AI models work best with prompt engineering?

Modern large language models including GPT-4, Claude, and Google Gemini all respond well to prompt engineering techniques. Different models exhibit varying strengths, with some excelling at creative tasks, others at analytical reasoning, and some optimized for coding. The best model depends on your specific application. Most prompt engineering skills transfer across models, though some optimization may be needed when switching between platforms.

Can prompt engineering replace traditional software development?

Prompt engineering complements rather than replaces traditional programming. It excels at natural language tasks, flexible interpretation, rapid prototyping, and creative applications. Traditional programming remains essential for deterministic logic, security-critical operations, complex calculations, system integration, and applications requiring guaranteed behavior. Most sophisticated applications combine both approaches, using traditional code for structure and reliability while leveraging prompt engineering for intelligent, adaptive capabilities.

How do I measure prompt engineering effectiveness?

Effectiveness metrics depend on your application. Common measurements include output accuracy compared to ground truth, user satisfaction scores for customer-facing applications, task completion rates, time savings compared to manual processes, cost per task or interaction, consistency across multiple runs of the same prompt, and relevance ratings from subject matter experts. Successful implementations establish clear success criteria before development and continuously measure performance against those benchmarks.

What industries use prompt engineering most?

Technology companies pioneered prompt engineering but adoption has spread broadly. Current high-utilization industries include marketing and content creation, customer service and support, software development, financial services, legal services, healthcare administration, education and training, and human resources. Essentially any knowledge work involving language understanding, content generation, or decision support from unstructured information can benefit from prompt engineering.

Are there risks with prompt engineering?

Yes, several risks require careful management. Models may hallucinate incorrect information, potentially producing confident but wrong outputs. Prompt injection attacks can manipulate models to ignore safety guidelines or reveal confidential information. Bias in training data can propagate through generated outputs. Inconsistent responses may create user confusion or operational problems. Organizations should implement validation processes, security measures, bias monitoring, human oversight for high-stakes decisions, and clear user communication about AI-generated content.

What's the future of prompt engineering?

Prompt engineering will likely evolve rather than disappear. As models improve at interpreting intent, basic prompting may become simpler. However, complex applications will require increasingly sophisticated prompt design, strategic architecture of multi-step AI workflows, integration with external data and tools, and governance frameworks ensuring quality and safety. The skill is transforming from tactical prompt crafting toward strategic design of AI-powered experiences, similar to how web development evolved from HTML coding to sophisticated user experience design.

Key Terms Glossary

Prompt: The input text provided to an AI language model to generate a response, including instructions, context, examples, and questions that guide the model's output.

Few-Shot Learning: A technique where prompts include several examples of desired input-output pairs, teaching the model the pattern or task through demonstration before presenting the actual query.

Chain-of-Thought: A prompting method that instructs models to show step-by-step reasoning before reaching conclusions, significantly improving performance on complex logical and mathematical tasks.

Zero-Shot Prompting: Providing task instructions without examples, relying entirely on the model's pre-trained knowledge to understand and complete the request.

Hallucination: When AI models generate plausible-sounding but factually incorrect or fabricated information, presenting it with confidence as if it were true.

Token: The basic unit of text that language models process, roughly equivalent to three-quarters of a word in English, which determines context window limits and processing costs.

Context Window: The maximum amount of text a language model can consider at once, measured in tokens, which constrains how much information can be included in prompts and conversations.

Prompt Injection: A security vulnerability where malicious inputs override a prompt's intended instructions, potentially causing models to ignore safety guidelines or reveal confidential information.

System Prompt: Initial instructions provided to language models that establish behavior, tone, and constraints for an entire conversation or application, typically invisible to end users.

Temperature: A parameter controlling randomness in model outputs, where lower values produce more deterministic responses and higher values increase creativity and variation.

Role Prompting: Assigning the model a specific persona or expertise that shapes response style, depth, and perspective, such as instructing it to respond as a domain expert.

Prompt Chaining: Breaking complex tasks into sequential prompts where each step builds on previous outputs, enabling sophisticated multi-stage workflows.

Conclusion

Prompt engineering has emerged as a fundamental discipline for maximizing the value organizations extract from generative AI technologies. By bridging human intent and machine capability through strategically designed inputs, prompt engineering transforms language models from impressive research achievements into practical business tools delivering measurable results.

The practice encompasses proven techniques from few-shot learning and chain-of-thought reasoning to role prompting and constraint specification, each suited to different applications and complexity levels. Organizations across industries are deploying prompt engineering for content creation, customer service, software development, legal services, healthcare documentation, and data analysis, consistently achieving substantial improvements in efficiency, quality, and scale.

As language models continue advancing in capability, prompt engineering evolves from tactical skill toward strategic competency. Success requires understanding model behaviors and limitations, systematic experimentation and refinement, appropriate risk management and validation, combination with traditional programming for robust solutions, and continuous learning as techniques and technologies progress.

For professionals, prompt engineering represents a valuable and increasingly essential skill. The barrier to entry remains low, with natural language communication and analytical thinking more critical than technical programming expertise. For organizations, investment in prompt engineering capabilities through training, best practice development, and center of excellence establishment pays dividends across AI initiatives.

The technology's trajectory suggests prompt engineering will remain central to AI implementation for the foreseeable future, though its focus may shift toward more sophisticated architectural and strategic concerns. Those who develop strong prompt engineering capabilities today position themselves and their organizations to thrive in an increasingly AI-augmented business landscape.