Last Updated: December 7, 2025

Key Takeaways

Artificial intelligence enables computers to perform tasks that typically require human intelligence, including learning, reasoning, problem-solving, and decision-making

AI powers everyday technologies from smartphone assistants and social media feeds to navigation apps and streaming recommendations

The AI market reached an estimated 184 billion dollars in 2024 and is projected to exceed 800 billion dollars by 2030

Modern AI relies primarily on machine learning and deep learning rather than hand-coded rules

Generative AI tools like ChatGPT, Gemini, and Claude represent the latest breakthrough in AI capabilities

AI augments rather than replaces human work in most applications, creating new opportunities while transforming existing roles

Artificial intelligence has evolved from a science fiction concept to an everyday reality, powering the technologies billions of people use daily. From the voice assistant answering your questions to the algorithm recommending your next video, AI systems increasingly shape how we work, learn, communicate, and make decisions.

Understanding AI matters more in 2025 than ever before. The technology influences career opportunities, business strategies, educational approaches, and societal development. This guide explains what AI actually is, how it works, where it's used, and why it matters—in clear language anyone can understand regardless of technical background.


What Is Artificial Intelligence?

Artificial intelligence refers to computer systems designed to perform tasks that typically require human intelligence. These tasks include understanding natural language, recognizing patterns in images, making decisions based on data, solving complex problems, and learning from experience.

Unlike traditional computer programs that follow explicit instructions coded by programmers, AI systems learn patterns from data and improve their performance over time. A traditional program follows rigid "if-then" rules, while an AI system adapts and refines its approach based on outcomes and new information.

The "intelligence" in artificial intelligence encompasses several capabilities including perception (understanding sensory inputs like images or speech), reasoning (drawing logical conclusions from information), learning (improving performance through experience), problem-solving (finding solutions to complex challenges), and language understanding (processing and generating human communication).

AI systems exist on a spectrum from narrow applications performing specific tasks to the theoretical goal of artificial general intelligence matching human cognitive abilities across all domains. Every AI application in use today represents narrow AI—highly capable within defined boundaries but unable to transfer capabilities to different tasks without retraining.

The term "artificial intelligence" was coined by John McCarthy and colleagues in their 1955 proposal for the 1956 Dartmouth Conference, where researchers gathered to explore whether machines could simulate human intelligence. While early AI research pursued symbolic reasoning and hand-coded knowledge, modern AI achieves results primarily through machine learning from massive datasets.

A Brief History of AI

The Foundational Era (1950s-1970s)

AI emerged as a formal field in 1956 when John McCarthy organized the Dartmouth Conference, bringing together researchers to explore machine intelligence. Early AI research pursued symbolic AI where programmers encoded human knowledge and reasoning rules into computer systems.

Notable achievements included Arthur Samuel's checkers program that learned to play competitively in 1959, Joseph Weizenbaum's ELIZA chatbot demonstrating natural language processing in 1966, and early expert systems capturing specialist knowledge in narrow domains.

The period also experienced the first "AI winter" in the 1970s as initial optimism met practical limitations. Computers lacked processing power for ambitious goals, funding dried up after unmet promises, and the complexity of human intelligence proved greater than anticipated.

The Knowledge-Based Systems Era (1980s-1990s)

AI revived in the 1980s through expert systems, programs encoding domain specialist knowledge for applications like medical diagnosis and financial analysis. Companies invested billions in AI research and commercialization, viewing the technology as a competitive advantage.

However, limitations of rule-based approaches became apparent. Expert systems required extensive manual knowledge encoding, struggled with uncertainty and ambiguity, couldn't learn from experience, and proved brittle when encountering unexpected situations. Another AI winter followed as commercial applications underdelivered.

The Machine Learning Revolution (1997-2012)

The field shifted toward machine learning, in which algorithms learn patterns from data rather than following hand-coded rules. High-profile milestones of the period included IBM's Deep Blue defeating world chess champion Garry Kasparov in 1997 (largely through brute-force search rather than learning) and IBM Watson winning Jeopardy! in 2011.

Increasing computational power, growing datasets from internet expansion, and algorithmic improvements in machine learning made data-driven approaches viable. The success of statistical methods over symbolic reasoning fundamentally redirected AI research.

The Deep Learning Era (2012-2020)

Deep learning—neural networks with many layers trained on massive datasets—revolutionized AI starting with AlexNet's 2012 breakthrough in image recognition. The approach achieved dramatic improvements in computer vision, speech recognition, natural language processing, and game playing.

Major milestones included Google's AlphaGo defeating world Go champion Lee Sedol in 2016, demonstrating superhuman performance in the ancient strategy game. Voice assistants like Siri, Alexa, and Google Assistant brought AI into everyday consumer use.

Companies invested billions in AI research and development. Google, Facebook, Amazon, Microsoft, and Chinese tech giants built massive AI research teams. The period established deep learning as the dominant AI paradigm.

The Generative AI Era (2020-Present)

Large language models and generative AI emerged as the latest breakthrough. OpenAI's GPT-3 in 2020 demonstrated unprecedented language capabilities, followed by ChatGPT in November 2022 bringing generative AI to mainstream awareness.

The release of ChatGPT triggered an AI gold rush with companies racing to develop competitive models. Google Gemini, Claude, and numerous other platforms followed, while image generators like Midjourney and DALL-E democratized visual content creation.

By 2025, generative AI capabilities span text, images, video, audio, and code. The technology increasingly integrates into productivity tools, creative applications, and business workflows worldwide.

How Artificial Intelligence Works

Data: The Foundation of Modern AI

Modern AI systems learn from data rather than following explicitly programmed rules. Training data provides examples from which AI algorithms extract patterns, relationships, and rules automatically.

An AI system learning to identify cats in photos trains on thousands of images labeled as containing a cat or not. The system analyzes visual patterns distinguishing cats from other objects, such as pointy ears, whiskers, facial structure, and body shape. After training, the system recognizes cats in new photos it hasn't seen before.
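The cat example can be reduced to a few lines of Python. This is a minimal sketch, not how production image classifiers work: it assumes each photo has already been turned into two hypothetical numeric features (`ear_pointiness` and `snout_length`, invented here for illustration) and uses a simple nearest-centroid classifier in place of a deep neural network. Training averages the labeled examples for each class; prediction picks the closest average for a photo the system has never seen.

```python
# A minimal supervised-learning sketch: a nearest-centroid classifier.
# Assumption: each photo has been reduced to two hypothetical features,
# (ear_pointiness, snout_length), each scored from 0 to 1.
from math import dist


def train(examples):
    """Average the feature vectors of each label to get one centroid per class."""
    sums, counts = {}, {}
    for features, label in examples:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [v / counts[label] for v in acc] for label, acc in sums.items()}


def predict(centroids, features):
    """Assign the label whose centroid is closest in Euclidean distance."""
    return min(centroids, key=lambda label: dist(centroids[label], features))


# Labeled training data: (features, label). Values are invented for illustration.
training_data = [
    ((0.9, 0.2), "cat"), ((0.8, 0.3), "cat"), ((0.85, 0.25), "cat"),
    ((0.3, 0.8), "dog"), ((0.2, 0.9), "dog"), ((0.4, 0.7), "dog"),
]

model = train(training_data)
print(predict(model, (0.75, 0.35)))  # an unseen example -> cat
```

Modern image classifiers learn their own features directly from pixels rather than relying on hand-picked measurements, but the train-then-predict pattern is the same.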

The quality and quantity of training data directly determine an AI system's capability. More diverse, accurate, and comprehensive data produces more capable AI. Biased, limited, or low-quality data results in flawed AI systems with poor performance.

Algorithms: How AI Learns

AI algorithms are mathematical procedures enabling computers to learn from data. Different algorithm types suit different problems and data types.

Machine learning algorithms include supervised learning where systems learn from labeled examples, unsupervised learning finding patterns in unlabeled data, and reinforcement learning improving through trial and error based on rewards and penalties.
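To make the difference from learning with labels concrete, here is a minimal Python sketch of unsupervised learning using k-means clustering, one classic unsupervised algorithm. The data are hypothetical spending amounts with no labels attached, and the two starting centers are an illustrative assumption; the algorithm discovers the two groups on its own.

```python
# Unsupervised learning sketch: 1-D k-means with k=2 on unlabeled numbers.
# Assumption: the values are hypothetical customer spending amounts; no labels are given.


def kmeans_1d(points, centers, iterations=10):
    """Alternate between assigning each point to its nearest center and
    moving each center to the mean of the points assigned to it."""
    centers = list(centers)
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for p in points:
            nearest = min(range(len(centers)), key=lambda i: abs(p - centers[i]))
            clusters[nearest].append(p)
        # Keep a center where it is if no points were assigned to it.
        centers = [sum(c) / len(c) if c else centers[i] for i, c in enumerate(clusters)]
    return centers, clusters


spend = [12, 15, 11, 14, 95, 102, 99, 13, 97]
centers, clusters = kmeans_1d(spend, centers=[0, 50])
print(centers)   # [13.0, 98.25]
print(clusters)  # [[12, 15, 11, 14, 13], [95, 102, 99, 97]]
```

Nothing tells the algorithm which values belong together; the grouping emerges from the data. A supervised method would instead need examples already labeled with the correct group.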

Deep learning uses neural networks—computational structures inspired by biological brains with layers of interconnected nodes processing information. Deep neural networks with many layers can learn extremely complex patterns from raw data.
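The phrase "layers of interconnected nodes" can be made concrete with a tiny network. In this sketch the weights are hand-picked (an assumption for illustration; real networks learn millions or billions of weights during training) and each node uses a simple on/off threshold. The hidden layer lets the network compute XOR, which outputs 1 only when exactly one input is 1, a pattern a single threshold node cannot represent.

```python
# A tiny two-layer neural network with hand-picked (not learned) weights.
# Assumption: threshold activations for readability; real networks use
# smooth activations so that their weights can be trained.


def step(z):
    """Threshold activation: the node fires (1) when its weighted input is positive."""
    return 1 if z > 0 else 0


def layer(inputs, weights, biases):
    """Each node sums its weighted inputs, adds a bias, and applies the activation."""
    return [step(sum(w * x for w, x in zip(node_w, inputs)) + b)
            for node_w, b in zip(weights, biases)]


# Hidden layer: node 1 behaves like OR, node 2 behaves like AND.
HIDDEN_W = [[1, 1], [1, 1]]
HIDDEN_B = [-0.5, -1.5]
# Output layer: fires when OR is true but AND is false, which is XOR.
OUTPUT_W = [[1, -1]]
OUTPUT_B = [-0.5]


def forward(x1, x2):
    hidden = layer([x1, x2], HIDDEN_W, HIDDEN_B)
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]


for a in (0, 1):
    for b in (0, 1):
        print(a, b, "->", forward(a, b))
```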

Training: Teaching AI Systems

Training involves exposing AI algorithms to data repeatedly, allowing systems to adjust internal parameters to improve performance. During training, the system makes predictions, compares predictions to correct answers, calculates errors, and adjusts to reduce future errors.
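The predict, compare, calculate-error, adjust cycle described above is gradient descent. This minimal Python sketch fits a straight line, y = w*x + b, to toy data generated from y = 2x + 1; the learning rate, number of passes, and data are illustrative assumptions. Large models follow the same cycle with billions of parameters instead of two.

```python
# Training-loop sketch: gradient descent fitting y = w*x + b.
# Assumption: toy data from y = 2x + 1; learning rate and epoch count are
# illustrative choices, not tuned values.


def train(xs, ys, learning_rate=0.05, epochs=2000):
    w, b = 0.0, 0.0  # internal parameters start at arbitrary values
    n = len(xs)
    for _ in range(epochs):
        # 1. Predict with the current parameters.
        preds = [w * x + b for x in xs]
        # 2-3. Compare with the correct answers and measure the error.
        errors = [p - y for p, y in zip(preds, ys)]
        # 4. Adjust each parameter a small step against the gradient of mean squared error.
        grad_w = 2 / n * sum(e * x for e, x in zip(errors, xs))
        grad_b = 2 / n * sum(errors)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [0, 1, 2, 3, 4]
ys = [2 * x + 1 for x in xs]
w, b = train(xs, ys)
print(round(w, 3), round(b, 3))  # close to 2.0 and 1.0

# Inference: apply the learned parameters to an input not in the training data.
print(round(w * 10 + b, 2))  # close to 21
```

The last line is inference: the trained parameters are applied to an input the model never saw during training, and they do not change while doing so.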

Training large AI models requires substantial computational resources. The large models behind ChatGPT and similar systems are trained on thousands of GPUs for weeks or months, processing trillions of text tokens. The computational investment enables capabilities impossible with smaller-scale training.

After training, AI systems enter deployment where they process new inputs and generate predictions or outputs. Deployed systems continue learning in some cases, improving through additional data and user interactions.

Inference: AI Systems in Action

Inference describes AI systems processing new inputs to generate outputs after training completes. When you ask ChatGPT a question, the system performs inference—applying learned patterns to generate relevant responses.

Inference requires less computational power than training but still demands significant resources for large models. Optimizations reduce inference costs through techniques like model compression, quantization, and efficient serving infrastructure.
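Quantization is one of the compression techniques mentioned above. The sketch below assumes the simplest variant, symmetric per-tensor 8-bit quantization: every weight is divided by a single scale factor and rounded to an integer between -127 and 127, so each weight needs 8 bits instead of 32 (when the originals are 32-bit floats). Production toolkits use more refined schemes, and the weight values here are made up.

```python
# Quantization sketch: store float weights as 8-bit integers plus one scale.
# Assumption: simple symmetric per-tensor quantization; production toolkits
# use more refined schemes (per-channel scales, calibration data, etc.).


def quantize(weights, bits=8):
    """Map floats to signed integers in [-(2**(bits-1) - 1), 2**(bits-1) - 1]."""
    qmax = 2 ** (bits - 1) - 1  # 127 for 8 bits
    scale = max(abs(w) for w in weights) / qmax
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights, scale):
    """Recover approximate float weights for use at inference time."""
    return [q * scale for q in q_weights]


weights = [0.42, -1.27, 0.05, 0.9, -0.33]  # invented example weights
q, scale = quantize(weights)
restored = dequantize(q, scale)
print(q)  # [42, -127, 5, 90, -33]
print([round(r, 3) for r in restored])

# Rounding to the nearest integer step means the error is at most half a step.
max_error = max(abs(a - r) for a, r in zip(weights, restored))
print(max_error <= scale / 2)
```

The small rounding error is the price paid for a roughly fourfold reduction in weight storage compared with 32-bit floats.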

The training-inference separation means most AI systems don't learn during normal use. Updates require retraining on new data, though some systems implement online learning, adapting gradually during deployment.

Types of Artificial Intelligence

By Capability Level

Narrow AI (Weak AI) performs specific tasks within defined domains but cannot transfer capabilities to different tasks. Every AI application in use today represents narrow AI including voice assistants, recommendation algorithms, image recognition, and language translation.

Narrow AI can exceed human performance within its specialty: AI beats the best human players at chess and Go and matches or exceeds specialists on certain medical diagnostic tasks. However, a chess-playing AI cannot recognize images or write emails despite superhuman chess performance.

Artificial General Intelligence (AGI) would match human cognitive abilities across all domains, learning new tasks as flexibly as humans. AGI remains theoretical with no clear timeline for achievement. Researchers debate whether current approaches will lead to AGI or whether fundamental breakthroughs are necessary.

Artificial Superintelligence (ASI) would surpass human intelligence across all domains. This theoretical concept raises profound questions about control, alignment, and societal impact. ASI remains speculative, though some researchers consider it an eventual possibility if AGI is achieved.

By Functionality

Reactive Machines respond to inputs with predetermined outputs without memory or learning capability. IBM's Deep Blue chess computer exemplifies reactive AI—highly capable at chess but unable to learn new games or remember past matches.

Limited Memory AI learns from historical data to inform decisions. Most modern AI falls into this category including self-driving cars learning from driving data, recommendation systems using past preferences, and chatbots drawing on conversation history.

Theory of Mind AI would understand that other entities have thoughts, emotions, and intentions—a capability current AI lacks. This theoretical AI type would enable more natural social interaction and collaboration with humans.

Self-Aware AI would possess consciousness and self-awareness. This remains entirely theoretical and controversial, with debate about whether machine consciousness is possible or meaningful.

By Technique and Application Domain

Machine Learning systems learn patterns from data to make predictions or decisions without explicit programming for specific outcomes.

Deep Learning uses multi-layer neural networks to learn complex patterns from raw data, powering advances in computer vision, natural language processing, and speech recognition.

Natural Language Processing (NLP) enables computers to understand, interpret, and generate human language. Applications include chatbots, translation, sentiment analysis, and text summarization.

Computer Vision allows computers to derive information from images and videos. Uses include facial recognition, medical image analysis, autonomous vehicles, and quality inspection.

Robotics AI combines perception, reasoning, and physical manipulation to enable robots to operate in complex, dynamic environments. Applications span manufacturing, healthcare, exploration, and service industries.

AI vs Machine Learning vs Deep Learning

These related terms often create confusion. Understanding their relationship clarifies how modern AI actually works.

Artificial Intelligence represents the broadest category—any computer system performing tasks requiring human-like intelligence. AI encompasses all approaches to creating intelligent machines including rule-based systems, machine learning, and other techniques.

Machine Learning is a subset of AI where systems learn from data rather than following explicitly programmed rules. Machine learning algorithms improve automatically through experience, discovering patterns humans might not recognize or articulate.

Deep Learning is a subset of machine learning using neural networks with multiple layers. Deep learning excels at processing unstructured data like images, audio, and text, powering recent AI breakthroughs.

The relationship forms a hierarchy: AI contains machine learning, which contains deep learning. All deep learning is machine learning, but not all machine learning is deep learning. All machine learning is AI, but not all AI uses machine learning.

Traditional AI used rule-based systems where programmers coded specific instructions. Modern AI relies primarily on machine learning and deep learning, which discover patterns from data automatically. This shift enabled AI systems to tackle problems too complex for hand-coded rules.

Example Comparison:

Traditional AI: Rules programmed to identify spam emails based on specific words and patterns

Machine Learning: Algorithm learning spam patterns from examples of spam and legitimate emails

Deep Learning: Neural network analyzing email text, sender patterns, and contextual signals to identify spam with high accuracy
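The difference between the first two approaches can be shown side by side. In this minimal Python sketch the keyword list, the four training emails, and the choice of multinomial naive Bayes (a classic statistical spam-filtering method) are all illustrative assumptions; "ham" is the conventional term for legitimate email. The hand-written rule misses a message that uses none of its keywords, while the learned model flags it from word statistics it picked up from examples.

```python
# Contrast sketch: a hand-written spam rule vs. a classifier learned from examples.
# Assumptions: tiny invented training set, whitespace tokenization, and
# multinomial naive Bayes with add-one smoothing as the learning method.
import math
from collections import Counter

# Traditional AI: a programmer writes the rule explicitly.
SPAM_WORDS = {"winner", "free", "prize"}


def rule_based_is_spam(text):
    return any(word in SPAM_WORDS for word in text.lower().split())


# Machine learning: word statistics are learned from labeled examples.
def train_naive_bayes(examples):
    word_counts = {"spam": Counter(), "ham": Counter()}
    doc_counts = Counter()
    for text, label in examples:
        word_counts[label].update(text.lower().split())
        doc_counts[label] += 1
    vocab = set(word_counts["spam"]) | set(word_counts["ham"])
    return word_counts, doc_counts, vocab


def learned_is_spam(model, text):
    word_counts, doc_counts, vocab = model
    total_docs = sum(doc_counts.values())
    scores = {}
    for label in ("spam", "ham"):
        total_words = sum(word_counts[label].values())
        # Log prior plus the log likelihood of each word, with add-one smoothing.
        score = math.log(doc_counts[label] / total_docs)
        for word in text.lower().split():
            score += math.log((word_counts[label][word] + 1) / (total_words + len(vocab)))
        scores[label] = score
    return scores["spam"] > scores["ham"]


training = [
    ("claim your free prize now", "spam"),
    ("urgent claim your reward now", "spam"),
    ("lunch meeting moved to noon", "ham"),
    ("please review the attached report", "ham"),
]
model = train_naive_bayes(training)

message = "urgent reward waiting claim now"
print(rule_based_is_spam(message))      # False: no listed keyword appears
print(learned_is_spam(model, message))  # True: learned word statistics flag it
```

A deep learning filter would replace the word counts with a neural network that can also weigh word order, sender patterns, and other context.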

The terms are sometimes used interchangeably in casual conversation, though they have distinct technical meanings. When someone says "AI" today, they typically mean machine learning or deep learning rather than older rule-based approaches.

Real-World AI Applications

AI has moved from research labs into everyday applications affecting billions of people. Understanding where AI operates reveals its practical impact and future trajectory.

Consumer Applications

Virtual Assistants like Siri, Alexa, and Google Assistant use natural language processing to understand voice commands, answer questions, control smart home devices, and manage tasks. These systems combine speech recognition, language understanding, and knowledge retrieval.

Social Media platforms employ AI extensively for content recommendation, identifying what posts users will engage with, facial recognition in photo tagging, content moderation detecting policy violations, and targeted advertising matching ads to user interests.

Streaming Services like Netflix, Spotify, and YouTube use recommendation algorithms analyzing viewing history, preferences, and behavior patterns to suggest content. The personalization drives user engagement and satisfaction.

Navigation Apps like Google Maps and Waze leverage AI for traffic prediction, route optimization, estimated arrival times, and real-time rerouting based on current conditions.

Smartphone Features incorporate AI for photography enhancement, face unlock, predictive text, battery optimization, and app suggestions based on usage patterns.

Business and Enterprise

Customer Service chatbots and virtual agents handle inquiries 24/7, answering common questions, troubleshooting problems, and escalating complex issues to human agents. Some companies report autonomous resolution rates of 60-70% with AI customer service.

Marketing and Sales teams use AI for customer segmentation, personalized recommendations, lead scoring, email optimization, content generation, and campaign performance prediction. AI enables personalization at scale previously impossible.

Human Resources applications include resume screening, candidate matching, interview scheduling, employee engagement analysis, and turnover prediction. AI can accelerate hiring and, when properly implemented and audited, help reduce bias, though models trained on historical hiring data can also reproduce past discrimination.

Financial Services deploy AI for fraud detection, credit risk assessment, algorithmic trading, customer service, regulatory compliance, and personalized financial advice. The industry invested heavily in AI for competitive advantage.

Supply Chain and Logistics leverage AI for demand forecasting, inventory optimization, route planning, warehouse automation, and delivery time prediction. Companies achieve significant cost reductions and efficiency gains.

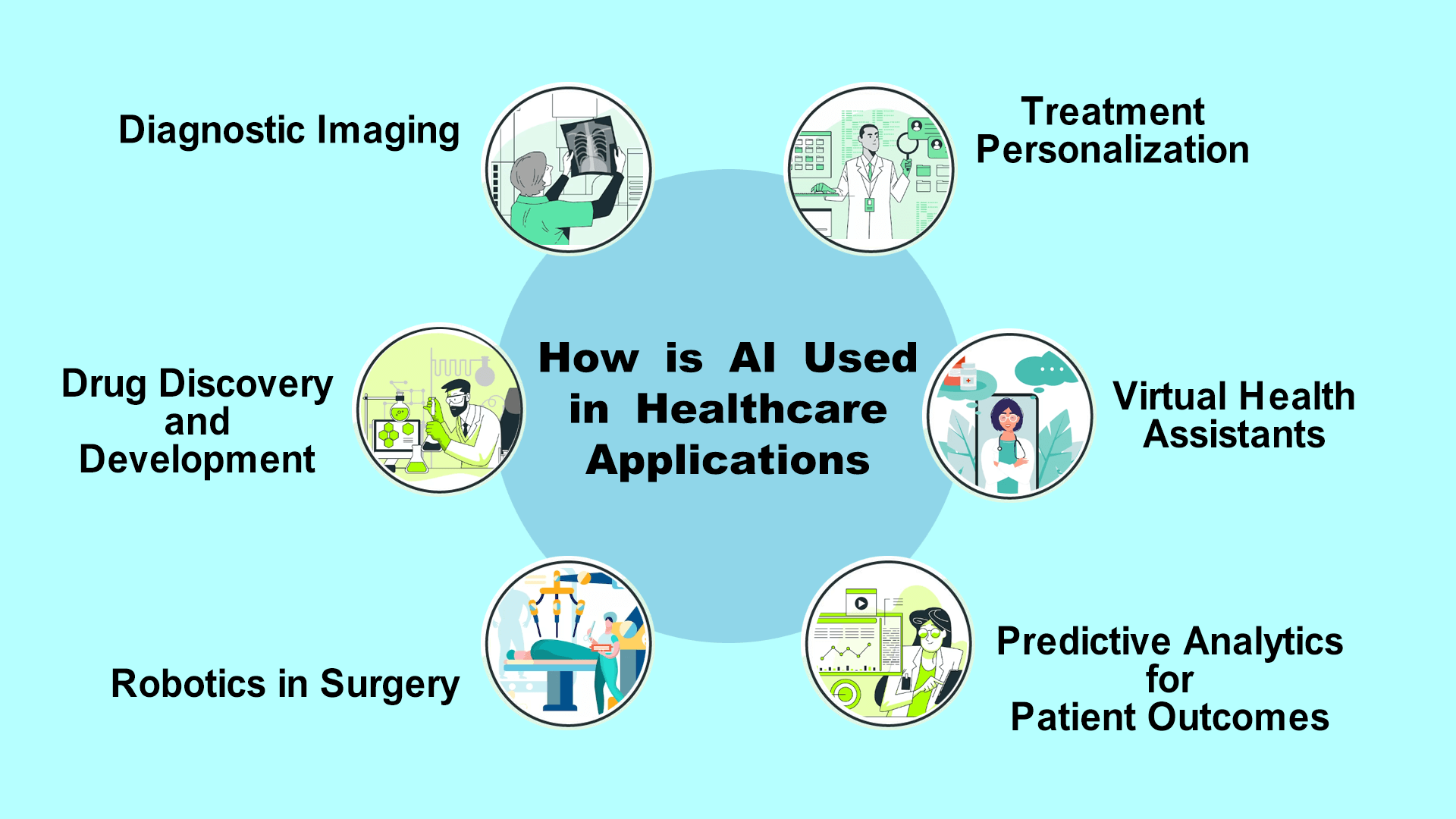

Healthcare and Life Sciences

Medical Diagnosis AI systems analyze medical images, detect diseases, predict patient outcomes, and recommend treatment options. Some AI systems match or exceed specialist accuracy for specific diagnostic tasks.

Drug Discovery uses AI to identify promising drug candidates, predict molecular properties, design new compounds, and optimize clinical trials. AI accelerates discovery processes that traditionally required years.

Personalized Medicine analyzes genetic data, medical history, and lifestyle factors to recommend individualized treatment approaches. AI enables precision medicine tailored to individual patients.

Hospital Operations optimize bed allocation, staff scheduling, supply management, and patient flow. Healthcare systems improve efficiency and reduce costs while maintaining care quality.

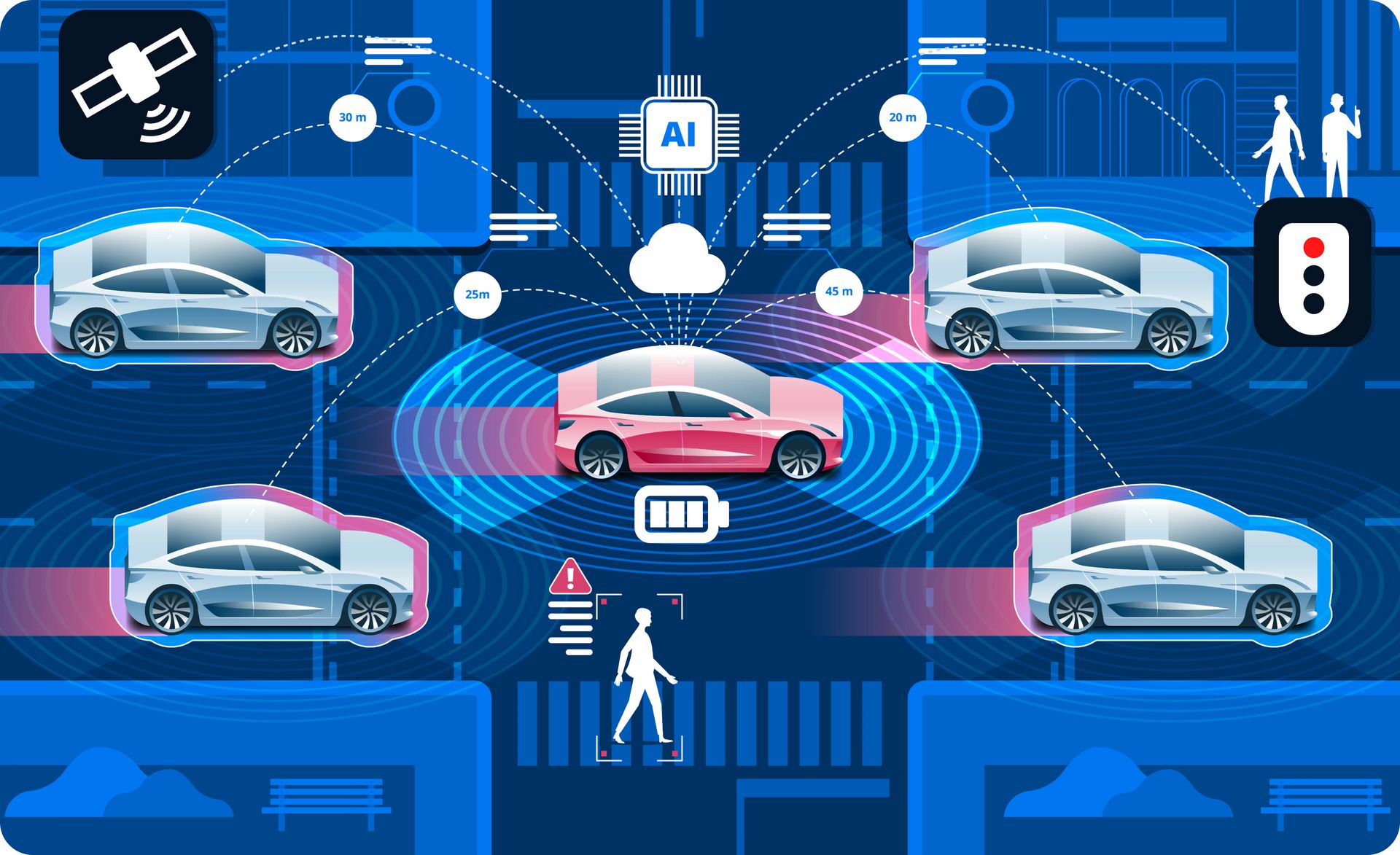

Transportation

Autonomous Vehicles use computer vision, sensor fusion, and decision-making AI to navigate roads, avoid obstacles, and transport passengers. Companies like Waymo in the United States and Baidu's Apollo Go in China operate driverless taxi services in select cities.

Traffic Management systems optimize signal timing, predict congestion, and route vehicles to reduce travel time and emissions. Cities deploy AI for more efficient transportation infrastructure.

Aviation employs AI for flight route optimization, predictive maintenance, autopilot systems, and air traffic management. The technology improves safety and efficiency.

Manufacturing and Industry

Quality Control uses computer vision to inspect products, identify defects, and ensure manufacturing standards with greater consistency than human inspection.

Predictive Maintenance analyzes sensor data to predict equipment failures before they occur, reducing downtime and maintenance costs through proactive repairs.

Process Optimization AI systems adjust production parameters, resource allocation, and scheduling to maximize efficiency, reduce waste, and improve quality.

Robotics increasingly incorporate AI for flexible manufacturing, enabling robots to adapt to variations, learn new tasks, and collaborate safely with human workers.

Education

Personalized Learning platforms adapt content difficulty, pacing, and teaching methods to individual student needs. AI tutors provide instant feedback and explanations tailored to knowledge gaps.

Administrative Automation handles grading, scheduling, enrollment management, and communication. Educators reclaim time for teaching and student interaction.

Accessibility Tools provide real-time transcription, translation, text-to-speech, and adaptive interfaces helping students with disabilities access education.

Table 1: AI Applications by Industry

| Industry | Primary Applications | Impact | Adoption Rate |
|---|---|---|---|
| Consumer Tech | Assistants, recommendations, personalization | Improved UX | 95%+ |
| Financial Services | Fraud detection, trading, risk assessment | Cost reduction, accuracy | 85% |
| Healthcare | Diagnosis, drug discovery, operations | Better outcomes, efficiency | 65% |
| Retail | Recommendations, inventory, pricing | Sales increase, optimization | 75% |
| Manufacturing | Quality control, maintenance, optimization | Efficiency, reduced downtime | 70% |
| Transportation | Autonomous vehicles, routing, optimization | Safety, efficiency | 60% |
| Marketing | Personalization, content, analytics | Conversion improvement | 80% |
| Education | Personalized learning, automation | Better outcomes, accessibility | 55% |

Major AI Platforms and Tools

Understanding the leading AI platforms helps navigate the rapidly evolving ecosystem and select appropriate tools for different needs.

Conversational AI Platforms

ChatGPT from OpenAI leads consumer AI adoption with 800 million weekly users. The platform excels at general conversation, creative writing, code assistance, and diverse knowledge work. Free and paid tiers ($20/month) provide accessible entry to powerful AI capabilities.

Claude from Anthropic emphasizes safety, nuanced reasoning, and long document analysis. Its 200,000-token context window can take in lengthy documents in a single conversation. Pricing matches ChatGPT at $20/month for the Pro tier.

Google Gemini integrates throughout Google's ecosystem, including Search, Workspace, and Android. Real-time web access and native multimodal capabilities spanning text, images, audio, and video are among its strengths. Free and premium ($19.99/month) tiers available.

Perplexity AI specializes in research with automatic source citations and comprehensive web search. The platform targets users requiring factual accuracy and source verification. Free and Pro ($20/month) options available.

Specialized AI Tools

AI Coding Assistants like GitHub Copilot, Cursor, and Replit Ghostwriter accelerate software development through code generation, completion, and debugging. Some studies and developer surveys report productivity gains of 30-55%.

AI Image Generators including Midjourney, DALL-E, and Stable Diffusion create visual content from text descriptions. The tools democratize visual creation for marketing, design, and creative projects.

AI Video Tools like Runway, Pika, and Synthesia generate video content from text or images. Applications span marketing, education, and creative production.

AI Writing Tools such as Jasper, Copy.ai, and Writesonic accelerate content creation for marketing, blogs, and business communications.

Enterprise AI Platforms

Major cloud providers offer comprehensive AI services, including Amazon Web Services (AWS) with SageMaker and various AI services, Microsoft Azure with Azure AI services (formerly Cognitive Services), and Google Cloud Platform with Vertex AI and specialized tools.

These platforms provide infrastructure, pre-trained models, custom model training, and deployment capabilities for organizations building AI applications at scale.

Open Source AI

DeepSeek releases open-weight models, some under the permissive MIT license, that rival proprietary alternatives on many benchmarks and can be freely used and modified. Meta's LLaMA models (released under Meta's own community license), Stability AI's Stable Diffusion, and the Hugging Face model repository offer additional open options.

Open-source AI enables customization, privacy control, and cost savings for organizations with technical capabilities to deploy and manage models independently.

Benefits and Limitations of AI

Understanding both AI's advantages and constraints enables realistic expectations and effective deployment strategies.

Key Benefits

Productivity Enhancement represents AI's most immediate value. Many tasks that take hours of human effort can be completed in minutes with AI assistance. Content creation, data analysis, coding, and research accelerate dramatically with AI augmentation.

24/7 Availability enables AI systems to operate continuously without fatigue or breaks. Customer service chatbots handle inquiries around the clock. Monitoring systems watch for problems continuously. Processing continues regardless of time zones or holidays.

Scalability allows AI to handle volume increases without proportional resource growth. Systems serving one user scale to millions without fundamental redesign. This characteristic enables small teams to accomplish what previously required large organizations.

Consistency in AI output quality exceeds human performance for many tasks. AI doesn't have bad days, get distracted, or vary quality based on mood. Repetitive tasks receive identical attention regardless of volume or duration.

Pattern Recognition in massive datasets surpasses human capability. AI identifies subtle correlations, anomalies, and trends invisible to human analysis. Medical diagnosis, fraud detection, and predictive maintenance benefit from superhuman pattern recognition.

Cost Reduction emerges as AI automates tasks previously requiring human labor. Some organizations report 40-70% cost savings in specific workflows while maintaining or improving quality. The economics particularly favor high-volume, repetitive applications.

Democratization of capabilities enables non-experts to achieve professional results. Non-designers create marketing visuals. Non-programmers build functional applications. Non-writers produce compelling copy. AI lowers barriers to capability previously requiring years of training.

Significant Limitations

Accuracy and Hallucination issues persist across AI systems. Even advanced models confidently generate incorrect information, fabricate sources, and make logical errors. High-stakes applications require human verification rather than blind trust in AI outputs.

Lack of True Understanding means AI systems manipulate patterns without genuine comprehension. Systems don't "know" what they're doing in any meaningful sense; they apply statistical patterns learned from training data. This limitation affects reasoning, common sense, and handling edge cases.

Bias and Fairness concerns arise because AI systems learn from data reflecting societal biases. Models may perpetuate or amplify discrimination in hiring, lending, criminal justice, and other domains. Responsible deployment requires bias testing and mitigation.

Data Dependencies create vulnerabilities. AI quality cannot exceed training data quality. Biased, incomplete, or outdated data produces flawed AI. Data requirements also raise privacy concerns as training demands massive information collection.

Computational Costs remain substantial despite efficiency improvements. Training large models requires millions of dollars in compute resources. Inference costs for serving billions of requests accumulate quickly. Environmental impact from energy consumption deserves consideration.

Limited Creativity constrains AI to recombining patterns from training data rather than generating truly novel ideas. While AI produces impressive outputs, the creativity represents sophisticated pattern matching rather than genuine innovation.

Explainability Challenges make AI decision-making opaque. Complex neural networks function as black boxes where even creators cannot fully explain specific outputs. This opacity creates problems for regulated industries, critical applications, and trust building.

Security Vulnerabilities include adversarial attacks manipulating AI behavior, data poisoning corrupting training data, model theft extracting proprietary models, and prompt injection exploiting language model weaknesses.

Job Displacement concerns are real as AI automates tasks previously requiring human workers. While AI creates new opportunities, transition periods create hardship for displaced workers requiring retraining and adaptation.

The Future of Artificial Intelligence

AI development continues accelerating, with several clear trends shaping the technology's trajectory in the years ahead.

Multimodal AI Integration

AI systems increasingly process and generate multiple data types simultaneously—text, images, video, audio, and sensor data. Multimodal AI enables more natural interaction and sophisticated understanding resembling human multisensory perception.

Applications will seamlessly combine capabilities currently requiring separate specialized tools. Single systems will analyze documents containing text and images, generate presentations with coordinated visuals and narration, and understand video content including visual, audio, and textual elements.

Agentic AI Systems

AI agents that autonomously plan, execute, and adapt to accomplish complex goals represent the next evolution beyond simple query-response systems. These agents will break down objectives, use tools, make decisions, and iterate toward solutions with minimal human guidance.

Business applications will delegate entire projects to AI agents that research, plan, create deliverables, and incorporate feedback. Personal AI assistants will manage schedules, communications, and tasks proactively rather than just responding to commands.

Improved Reasoning and Reliability

Current AI limitations in logical reasoning, mathematical problem-solving, and factual accuracy will diminish through architectural innovations, training improvements, and integration with external tools and knowledge bases.

Next-generation models will make fewer factual errors, demonstrate stronger logical consistency, and handle complex multi-step reasoning more reliably. These improvements are crucial for deploying AI in high-stakes applications requiring accuracy.

Personalization at Scale

AI systems will become increasingly personalized to individual users, learning preferences, communication styles, knowledge levels, and goals. Personal AI will understand context from long interaction histories rather than treating each conversation as isolated.

The personalization will extend across devices and services, creating continuity in AI assistance throughout daily life. Privacy-preserving personalization techniques will enable customization without centralizing sensitive personal data.

Edge AI Deployment

AI processing will increasingly occur on local devices rather than exclusively in cloud data centers. Edge AI provides faster responses, improved privacy, offline functionality, and reduced bandwidth requirements.

Smartphones, wearables, vehicles, and IoT devices will incorporate sophisticated AI capabilities operating independently of internet connectivity. This shift enables new applications while addressing privacy and latency concerns.

Regulation and Governance

Government regulation of AI will expand significantly through comprehensive frameworks such as the EU AI Act (in force since August 2024, with obligations phasing in over several years), sector-specific rules in the U.S., and varying approaches globally. Regulations will address safety testing, bias mitigation, transparency requirements, liability frameworks, and prohibited applications.

Industry self-governance through safety commitments, ethical guidelines, and responsible development practices will complement legal regulation. Organizations will face increasing pressure to demonstrate responsible AI deployment.

Open Source vs Proprietary Tension

The balance between open-source and proprietary AI development will continue evolving. Open-source models like DeepSeek and LLaMA challenge commercial closed systems while raising questions about safety, control, and business models.

This tension will shape AI accessibility, research progress, competitive dynamics, and concentration of power in AI development. Policy debates will address whether to favor openness or control for powerful AI systems.

Artificial General Intelligence Timeline

Experts disagree dramatically on AGI timelines, with estimates ranging from 5-10 years to never. Recent progress in large language models has renewed discussion about paths to AGI, though fundamental questions about the requirements for general intelligence remain unresolved.

If achieved, AGI would represent a discontinuous change in human civilization, with profound implications for the economy, society, and human purpose. The prospect drives both optimism about solving major challenges and concern about existential risks.

Frequently Asked Questions

What is the difference between AI and automation?

Automation executes predefined tasks following specific rules without learning or adapting. AI systems learn from data, adapt to new situations, and handle tasks not explicitly programmed. Traditional automation handles repetitive, rule-based processes while AI tackles complex, variable tasks requiring judgment. Modern systems often combine both—AI makes intelligent decisions while automation executes resulting actions.
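To make the distinction concrete, here is a toy Python sketch, not a production technique. The function names and the transaction data are hypothetical, invented purely for illustration. The rule-based function applies a cutoff a programmer chose in advance, while the "learning" function picks whichever cutoff best fits labeled examples, so its behavior changes when the data changes.

```python
# Toy contrast between rule-based automation and a system that learns from data.
# All names and numbers here are hypothetical, chosen for illustration only.

def rule_based_flag(amount: float) -> bool:
    """Automation: a fixed rule written in advance by a programmer."""
    return amount > 1000.0


def learn_threshold(examples: list[tuple[float, bool]]) -> float:
    """'Learning': pick the cutoff that classifies the labeled examples best.

    Each example is (amount, is_suspicious). Every observed amount is tried
    as a candidate cutoff; the learned rule is "flag if amount > cutoff".
    """
    best_cutoff = examples[0][0]
    best_correct = -1
    for cutoff in sorted(amount for amount, _ in examples):
        # Count how many examples this cutoff labels correctly.
        correct = sum((amount > cutoff) == label for amount, label in examples)
        if correct > best_correct:  # strict '>' keeps the lowest cutoff on ties
            best_cutoff, best_correct = cutoff, correct
    return best_cutoff


# Hypothetical labeled history: (transaction amount, was it suspicious?)
history = [(120.0, False), (300.0, False), (450.0, False),
           (700.0, True), (900.0, True), (1500.0, True)]

cutoff = learn_threshold(history)
print(cutoff)                  # 450.0
print(rule_based_flag(800.0))  # False: the fixed rule misses it
print(800.0 > cutoff)          # True: the learned cutoff flags it

# Data from a different context yields a different learned cutoff,
# with no change to the code itself.
new_history = [(50.0, False), (80.0, False), (150.0, True), (200.0, True)]
print(learn_threshold(new_history))  # 80.0
```

Real machine learning models fit millions or billions of parameters rather than a single cutoff, but the principle is the same: the system's behavior comes from data rather than from hand-written rules.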

Will AI take my job?

AI will transform most jobs rather than eliminate them entirely. Individual tasks within jobs will be automated, but roles will evolve to incorporate AI assistance and focus on uniquely human capabilities like creativity, emotional intelligence, complex judgment, and interpersonal skills. Workers who learn to collaborate effectively with AI will increase their value, while those who resist adapting may face displacement. Continuous learning and adaptation are essential.

Is AI dangerous?

AI presents both risks and benefits. Near-term risks include bias and discrimination in automated decisions, privacy violations from data collection, security vulnerabilities and adversarial attacks, job displacement and economic disruption, and misinformation from AI-generated content. Long-term risks include potential loss of human agency and autonomy, concentration of power in a few organizations, and existential risks if AGI is achieved. Responsible development, thoughtful regulation, and ongoing research aim to address these concerns.

How can I learn AI?

Begin with online courses from Coursera, edX, or fast.ai covering machine learning and deep learning fundamentals. Practice using tools like ChatGPT for daily tasks to understand capabilities and limitations. For technical depth, learn Python programming and machine learning frameworks like TensorFlow or PyTorch. Work on practical projects applying AI to problems you care about. Join AI communities and read research papers to stay current with developments.

Do I need to code to use AI?

No. Many AI tools require no coding, including ChatGPT and other chatbots for conversation and content creation, no-code AI platforms for building applications, and AI features integrated into software like Microsoft Copilot or Google Workspace. However, coding skills unlock greater capabilities through customization, integration with other systems, building custom AI applications, and understanding how AI works. Non-technical users can still leverage AI effectively without coding.

How much does AI cost?

Costs vary dramatically. ChatGPT, Google Gemini, Perplexity AI, and many other platforms offer free tiers with limited features. Consumer subscriptions range from $10-30 monthly for tools like ChatGPT Plus, Claude Pro, or specialized applications. Business and enterprise plans are typically priced per user per month and range from tens to hundreds of dollars depending on capabilities and support. Organizations building custom AI face infrastructure costs from thousands to millions of dollars. Start with free tiers to evaluate value before upgrading.

Is AI creative?

AI generates novel outputs, including writing, art, music, and designs, that humans perceive as creative. However, many researchers argue that AI creativity is sophisticated recombination of patterns from training data rather than genuine innovation or conscious intent, and that AI struggles to produce concepts far beyond the scope of its training data. The outputs are valuable for creative work but lack the intentionality, emotional depth, and conceptual breakthroughs that characterize human creativity. AI augments human creativity rather than replicating it.

What data does AI collect about me?

Depending on the platform, AI systems may collect conversation histories and prompts, usage patterns and preferences, feedback and ratings, and any documents or images you upload. Data use also varies by platform: some use interactions for model improvement, while others maintain stricter privacy. Read privacy policies carefully, avoid sharing sensitive personal information, use privacy-focused alternatives when appropriate, and regularly review and delete your data when platforms allow. Enterprise AI offerings with privacy guarantees exist for sensitive applications.

Conclusion

Artificial intelligence has transitioned from theoretical concept to practical technology reshaping virtually every aspect of modern life. The systems powering today's AI bear little resemblance to early rule-based approaches, instead learning complex patterns from data through machine learning and deep learning techniques that enable unprecedented capabilities.

Understanding AI matters because the technology increasingly influences career trajectories, business strategies, educational approaches, and societal development. AI literacy is becoming as fundamental as computer literacy was in previous decades: knowing what AI can and cannot do, where it operates, and how to use it effectively.

The AI available in 2025 represents narrow intelligence that excels at specific tasks but lacks general, human-like cognition. Every application, from ChatGPT to autonomous vehicles to medical diagnosis AI, operates within defined boundaries. Whether artificial general intelligence matching human cognitive flexibility is achievable remains an open question, with passionate arguments on both sides.

For individuals, AI creates both opportunities and challenges. Those who embrace AI as a collaborative tool that amplifies human capabilities position themselves for success in AI-augmented careers. Resisting AI adoption risks displacement as organizations and competitors leverage its productivity advantages. The key lies in focusing on uniquely human capabilities (creativity, emotional intelligence, complex judgment, ethical reasoning) while delegating routine tasks to AI systems.

For organizations, AI deployment requires balancing capability adoption with responsible implementation. The technology delivers genuine business value through productivity gains, cost reductions, and new capabilities. However, rushed deployment without considering bias, privacy, security, and human impact creates risks. Successful AI adoption combines technical implementation with change management, ethical frameworks, and continuous evaluation.

The future trajectory of AI remains uncertain in specifics but clear in direction: continued capability improvements, broader adoption, deeper integration into daily life, and growing influence on the economy and society. Whether this leads to utopian abundance or dystopian control depends on choices made by technologists, policymakers, businesses, and society collectively.

What remains certain is that AI will continue advancing and influencing how we work, learn, create, and interact. Understanding the technology—its capabilities, limitations, applications, and implications—enables informed participation in shaping that AI-influenced future rather than being shaped by forces we don't understand.

The AI revolution is not coming; it is already here. The question is not whether to engage with AI but how to do so thoughtfully, effectively, and responsibly, creating outcomes that benefit humanity broadly rather than concentrating benefits narrowly.